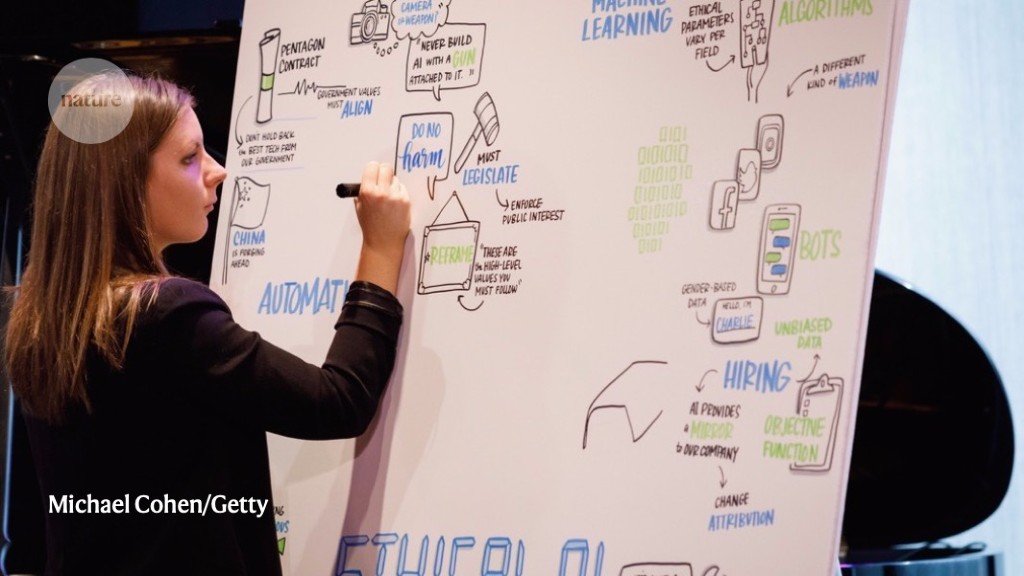

Article, published 1st Jan 2018: We survey eight research areas organized around one question: As learning systems become increasingly intelligent and autonomous, what design principles can best ensure that their behavior is aligned with the interests of the operators? We focus on two major technical obstacles to AI alignment: the …

Article, published 26th Sep 2023: A snapshot of what happened this past year in AI research, education, policy, hiring, and more.

Article, published 27th Jun 2022: How could machines learn as efficiently as humans and animals; learn to reason and plan; learn representations of percepts and action plans at multiple levels of abstraction, enabling them to reason, predict, and plan at multiple time horizons?

Article, published 7th Aug 2021: The development of Artificial General Intelligence (AGI) promises to be a major event. Along with its many potential benefits, it also raises serious safety concerns (Bostrom, 2014). The intention of this paper is to provide an easily accessible and up-to-date collection of references for the emerging field of AGI safety. A significant number of safety problems for AGI have been identified. We list these, and survey recent research on solving them. We also cover works on how best to think of AGI from the limited knowledge we have today, predictions for when AGI will first be created, and what will happen after its creation. Finally, we review the current public policy on AGI.

Article, published 25th Jun 2021: Humans have struggled to make truly intelligent machines. Maybe we need to let them get on with it themselves.

Article, published 3rd Nov 2020: Thirty years ago, Hinton’s belief in neural networks was contrarian. Now it’s hard to find anyone who disagrees, he says.

Article, published 23rd Aug 2023: A low-power chip that runs AI models using analog rather than digital computation shows comparable accuracy on speech-recognition tasks but is more than 14 times as energy efficient.

Article, published 19th Apr 2022: This story is the introduction to MIT Technology Review’s series on AI colonialism, which was supported by the MIT Knight Science Journalism Fellowship Program and the Pulitzer Center. An investigation into how AI is enriching a powerful few.

Article, published 1st Mar 2022: This year’s report shows that AI systems are starting to be deployed widely into the economy, but at the same time they are being deployed, the ethical issues associated with AI are becoming magnified. 103% more money was invested in the private investment of AI and AI-related startups in 2021 than in 2020 ($96.5 billion versus $46 billion).

Article, published 4th May 2021: As described in a AAAS report released today, “the COVID-19 pandemic provided … a unique opportunity to prove that AI could be harnessed for the benefit of all humanity, and AI developers seized the moment.”

Article, published 16th Jun 2021: A majority worries that the evolution of artificial intelligence by 2030 will continue to be primarily focused on optimizing profits and social control. Still, a portion celebrate coming AI breakthroughs that will improve life.

Article, published 28th Apr 2022: Shobita Parthasarathy warns that software designed to summarize, translate and write like humans might exacerbate distrust in science.

Article, published 17th Feb 2022: In the quest to make artificial intelligence that can reason and apply knowledge flexibly, many researchers are focused on fresh insights from neuroscience. Should they be looking to psychology too?

Article, published 27th Jun 2022: The viral image generation app is good, absurd fun. It’s also giving the world an education in how artificial intelligence may warp reality.

Article, published 7th Aug 2021: Is AI finally closing in on human intelligence? // 12.11.2020, Financial Times

Article, published 15th Jul 2020: Physicist Max Tegmark wants to make artificial intelligence work for everyone. Here he waxes lyrical about cosmology, consciousness and why AI is like fire

Article, published 15th Mar 2023: Current advances in Artificial Intelligence (AI) and Machine Learning have achieved unprecedented impact across research communities and industry. Nevertheless, concerns around trust, safety, interpretability and accountability of AI were raised by influential thinkers. Many identified the need for…

Article, published 24th Aug 2020: The National Institute of Standards and Technology has issued a set of draft principles for “explainable” artificial intelligence and is accepting…

Article, published 22nd Sep 2022: The future of artificial intelligence is neither utopian nor dystopian; it’s something much more interesting.

Article, published 22nd Jun 2022: An international team of around 1,000 largely academic volunteers has tried to break big tech’s stranglehold on natural-language processing and reduce its harms.

Article, published 23rd Dec 2020: For the first time, the organizers of NeurIPS required speakers to consider the societal impact of their work.

Article, published 7th Apr 2022: Physicist Maria Schuld on implementing machine-learning systems on quantum computers. Kit Chapman explains how technologies developed to make racing cars faster and safer could help us combat climate change.

Article, published 7th Aug 2021: Success in the quest for artificial intelligence has the potential to bring unprecedented benefits to humanity, and it is therefore worthwhile to investigate how to maximize these benefits while avoiding potential pitfalls. This article gives numerous examples (which should by no means be construed as an exhaustive list) of such worthwhile research aimed at ensuring that AI remains robust and beneficial.

Article, published 7th Aug 2021: This book on artificial intelligence covers multidisciplinary research, examines current research frontiers in AI/robotics, and likely impacts on societal well-being, human-robot relationships, as well as the opportunities and risks for sustainable development and peace....

Article, published 7th Aug 2021: The report captures key takeaways from various roundtable conversations, identifies the challenges and opportunities that different regions of the world face when dealing with emerging technologies, and evaluates China’s role as a global citizen. In times of economic decoupling and rising geopolitical bipolarity, it highlights opportunities for smart partnerships, describes how data and AI applications can be harnessed for good, and develops scenarios on where an AI-powered world might be headed.

Article, published 14th Sep 2022: Deep neural networks are increasingly helping to design microchips, predict how proteins fold, and outperform people at complex games. However, new research suggests there are limitations to what deep neural networks can and cannot do

Article, published 28th Jun 2019: The State of AI Report analyses the most interesting developments in AI. Read and download here.

Article, published 14th Feb 2020: Recent research in artificial intelligence and machine learning has largely emphasized general-purpose learning and ever-larger training sets and more and more compute. In contrast, I propose a hybrid, knowledge-driven, reasoning-based approach, centered around cognitive models, that could provide t…

Article, published 5th Nov 2020: Five years from now, the field of AI will look very different than it does today.

Article, published 19th Feb 2021: Five years from now, the field of AI will look very different than it does today.

Article, published 1st Dec 2020: Scientists today have completely different ideas of what machines can learn to do than we had only 10 years ago. In image processing, speech and video processing, machine vision, natural language processing, and classic two-player games, in particular, the state-of-the-art has been rapidly pushed forwa…

Article, published 2nd Jun 2022: Artificial intelligence approaches inspired by human cognitive function usually have a single learned ability. The authors propose a multimodal foundation model that demonstrates the cross-domain learning and adaptation for a broad range of downstream cognitive tasks.

Article, published 1st Mar 2023: The report highlights some of our most interesting observations, sketches connections and patterns, and asks what these might mean for the future of development.

Article, published 7th Aug 2021: Since its beginning in the 1950s, the field of artificial intelligence has cycled several times between periods of optimistic predictions and massive investment (“AI spring”) and periods of disappointment, loss of confidence, and reduced funding (“AI winter”). Even with today’s seemingly fast pace of AI breakthroughs, the development of long-promised technologies such as self-driving cars, housekeeping robots, and conversational companions has turned out to be much harder than many people expected. One reason for these repeating cycles is our limited understanding of the nature and complexity of intelligence itself. In this paper I describe four fallacies in common assumptions made by AI researchers, which can lead to overconfident predictions about the field. I conclude by discussing the open questions spurred by these fallacies, including the age-old challenge of imbuing machines with humanlike common sense.

1.1.1 Deeper Machine Learning

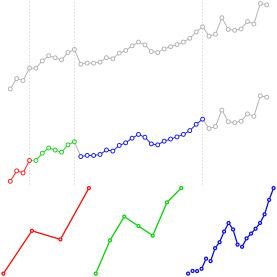

Article, published 3rd Nov 2020: Thirty years ago, Hinton’s belief in neural networks was contrarian. Now it’s hard to find anyone who disagrees, he says.

Article, published 1st Mar 2022: This year’s report shows that AI systems are starting to be deployed widely into the economy, but at the same time they are being deployed, the ethical issues associated with AI are becoming magnified. 103% more money was invested in the private investment of AI and AI-related startups in 2021 than in 2020 ($96.5 billion versus $46 billion).

Article, published 7th Oct 2020: Machine learning is helping biologists solve hard problems, including designing effective synthetic biology tools. Two teams from the Wyss Institute and MIT have created a set of algorithms that can effectively predict the efficacy of thousands of RNA-based sensors called toehold switches, allowing …

Article, published 27th Jun 2022: The viral image generation app is good, absurd fun. It’s also giving the world an education in how artificial intelligence may warp reality.

Article, published 22nd Jun 2022: Better data and new statistical techniques could enable researchers to measure the form of inequality that seems most harmful to society: inequality of opportunity.

Article, published 22nd Jun 2022: An international team of around 1,000 largely academic volunteers has tried to break big tech’s stranglehold on natural-language processing and reduce its harms.

Article, published 14th Sep 2022: Deep neural networks are increasingly helping to design microchips, predict how proteins fold, and outperform people at complex games. However, new research suggests there are limitations to what deep neural networks can and cannot do

Article, published 1st Dec 2020: Scientists today have completely different ideas of what machines can learn to do than we had only 10 years ago. In image processing, speech and video processing, machine vision, natural language processing, and classic two-player games, in particular, the state-of-the-art has been rapidly pushed forwa…

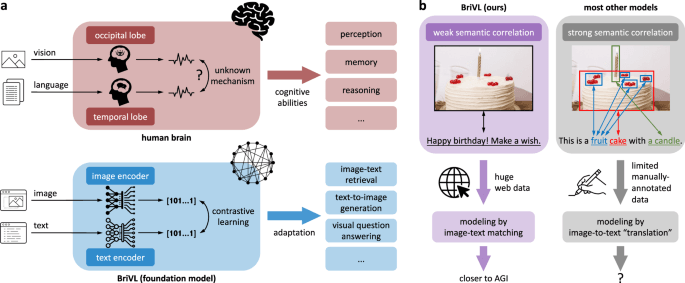

1.1.2 Multimodal AI

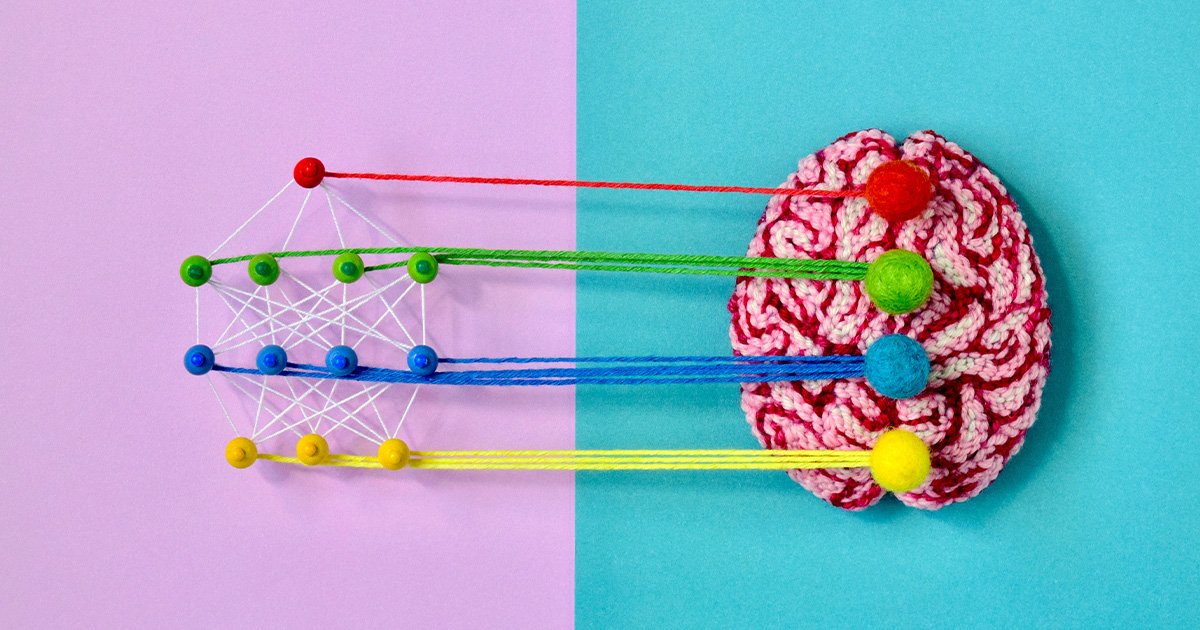

Article, published 27th Jun 2022: How could machines learn as efficiently as humans and animals; learn to reason and plan; learn representations of percepts and action plans at multiple levels of abstraction, enabling them to reason, predict, and plan at multiple time horizons?

Article, published 19th Apr 2022: This story is the introduction to MIT Technology Review’s series on AI colonialism, which was supported by the MIT Knight Science Journalism Fellowship Program and the Pulitzer Center. An investigation into how AI is enriching a powerful few.

Article, published 28th Apr 2022: Shobita Parthasarathy warns that software designed to summarize, translate and write like humans might exacerbate distrust in science.

Article, published 17th Feb 2022: In the quest to make artificial intelligence that can reason and apply knowledge flexibly, many researchers are focused on fresh insights from neuroscience. Should they be looking to psychology too?

Article, published 27th Jun 2021: InsideAI Weekend Commentary: The Problem Investing In AI Bias Solutions

Article, published 14th Feb 2020: Recent research in artificial intelligence and machine learning has largely emphasized general-purpose learning and ever-larger training sets and more and more compute. In contrast, I propose a hybrid, knowledge-driven, reasoning-based approach, centered around cognitive models, that could provide t…

Article, published 24th Jun 2021: Teaching robots to understand “why” could help them transfer their knowledge to other environments

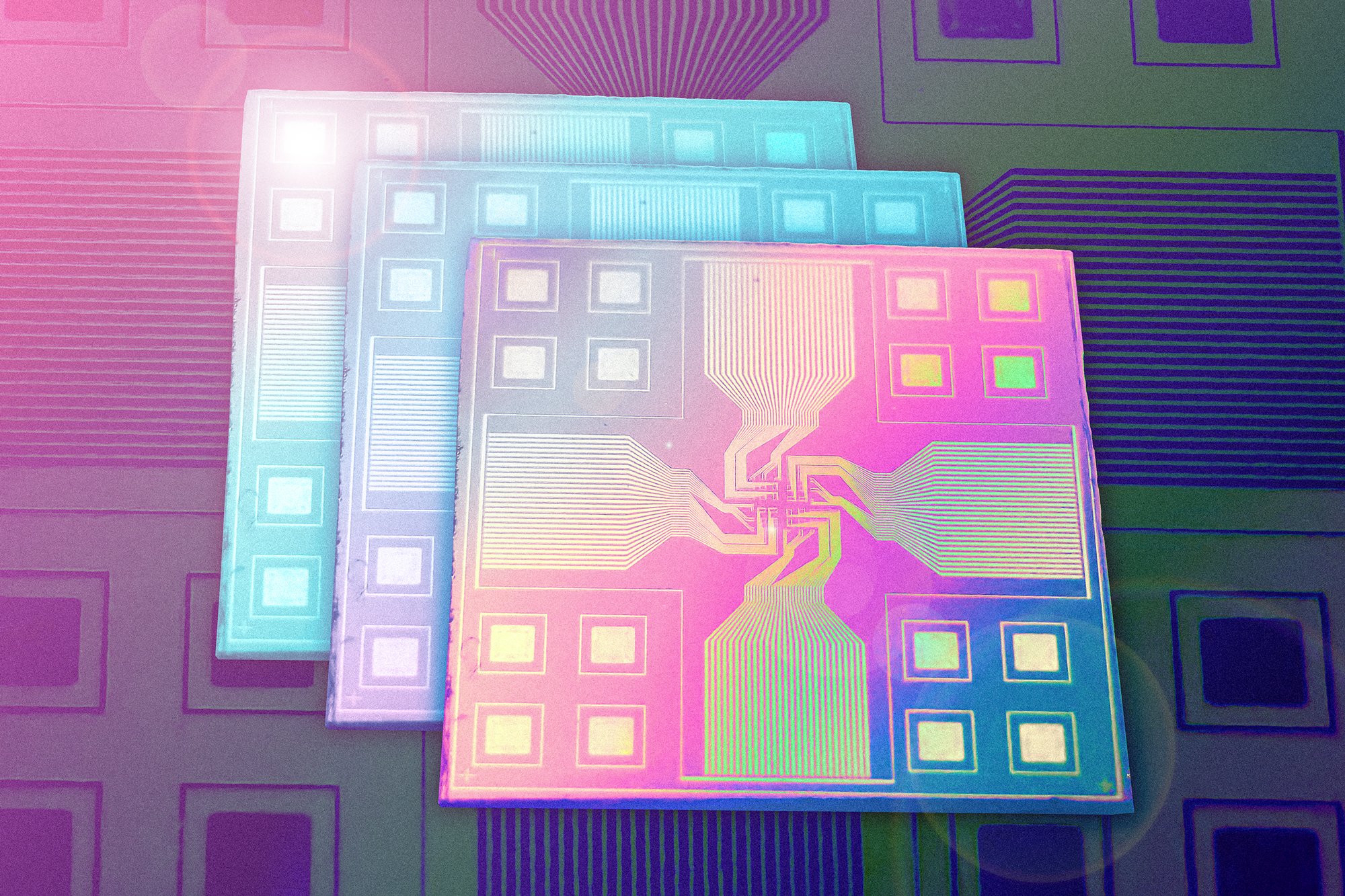

1.1.3 Intelligent devices

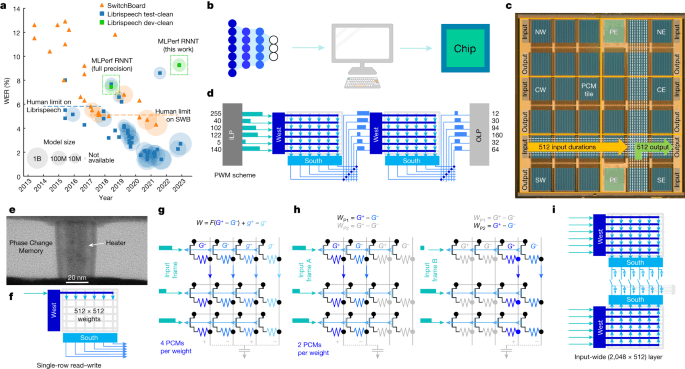

Article, published 1st Jan 2018: We survey eight research areas organized around one question: As learning systems become increasingly intelligent and autonomous, what design principles can best ensure that their behavior is aligned with the interests of the operators? We focus on two major technical obstacles to AI alignment: the …

Article, published 18th Jun 2021: Computer scientists are questioning whether DeepMind will ever be able to make machines with the kind of “general” intelligence seen in humans and animals.

Article, published 22nd Sep 2022: The future of artificial intelligence is neither utopian nor dystopian; it’s something much more interesting.

Article, published 2nd Jun 2022: Artificial intelligence approaches inspired by human cognitive function usually have a single learned ability. The authors propose a multimodal foundation model that demonstrates the cross-domain learning and adaptation for a broad range of downstream cognitive tasks.

1.1.4 Alternative AI

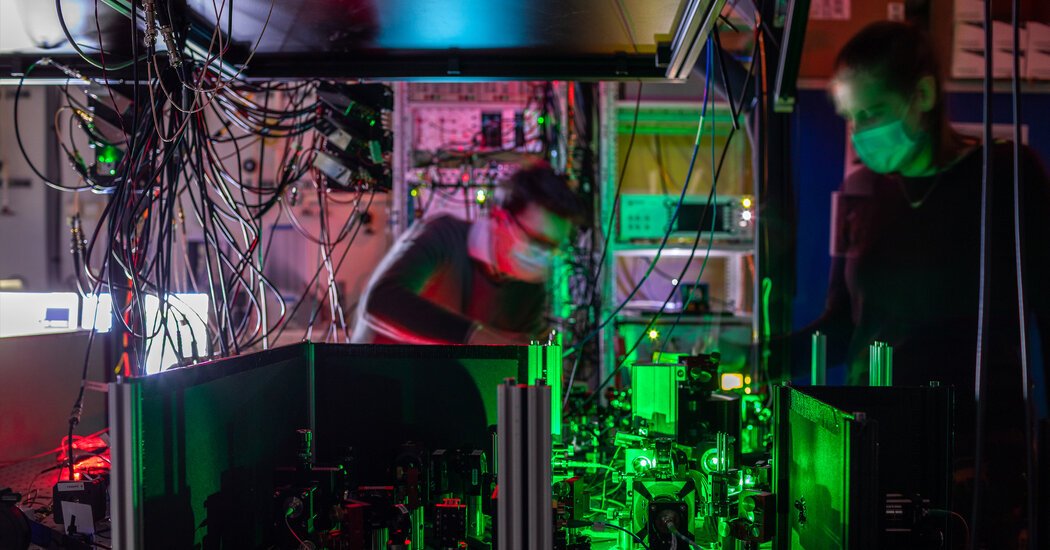

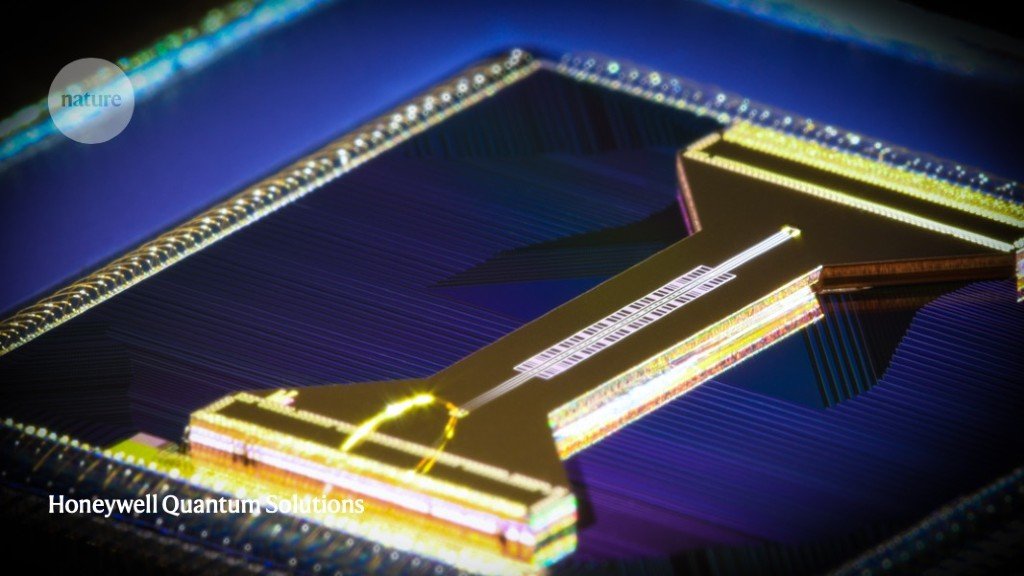

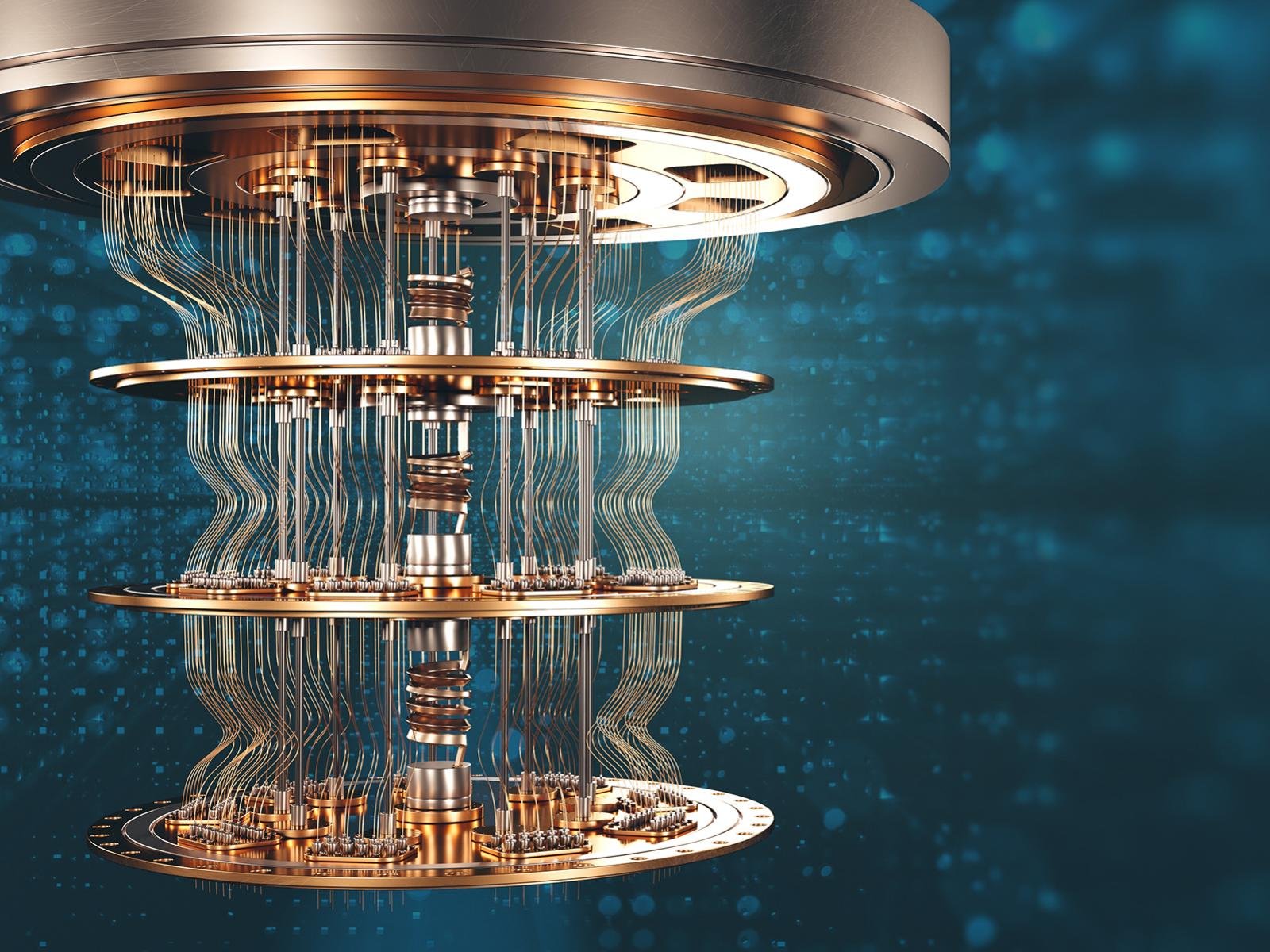

Article, published 7th Apr 2022: Physicist Maria Schuld on implementing machine-learning systems on quantum computers. Kit Chapman explains how technologies developed to make racing cars faster and safer could help us combat climate change.

Article, published 21st May 2021: Hundreds of scientists around the world are working together to understand one of the most powerful emerging technologies before it’s too late.

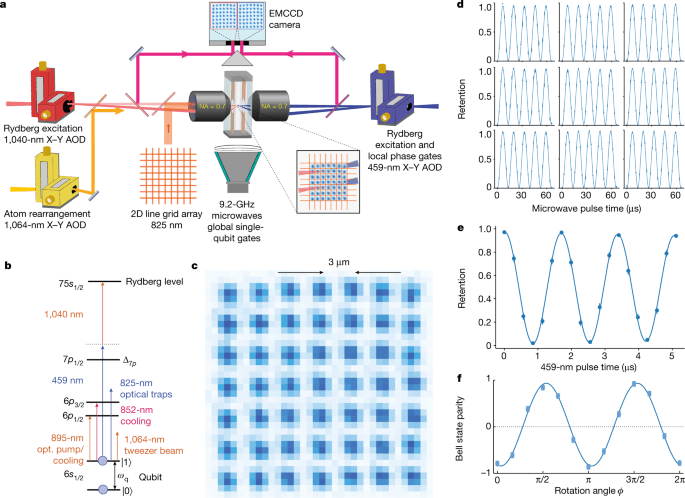

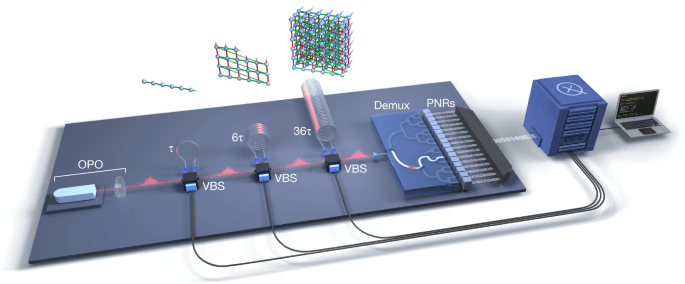

Article, published 26th Sep 2023.

Article, published 25th May 2022: Scientists have improved their ability to send quantum information across distant computers, and have taken another step toward the network of the future.

Article, published 4th Jun 2022: Quantum computing is very susceptible to noise and disruptive influences in the environment. This makes quantum computers “noisy,” since quantum bits, or qubits, lose information when they go out of sync, a process known as decoherence.

Article, published 8th May 2021: A quantum-powered future is an increasingly likely scenario.

Article, published 17th Nov 2020: Trapped-ion systems are gaining momentum in the quest to make a commercial quantum computer.

Article, published 1st Jun 2022: In this podcast we speak to a molecular geneticist and a philosopher of science

Article, published 3rd Dec 2020: Google trumpeted its quantum computer that outperformed a conventional supercomputer. A Chinese group says it’s done the same, with different technology.

Article, published 21st Sep 2022: Scientists to develop a quantum computer dedicated to life sciences. Denmark's Novo Nordisk Foundation is to develop a practical quantum computer with applications ranging from creating new drugs to finding links between genes, environment and disease.

Article, published 2nd Jul 2023: Quantum computers offer a new paradigm of computing with the potential to vastly outperform any imaginable classical computer. This has caused a gold rush towards new quantum algorithms and hardware. In light of the growing expectations and hype surrounding quantum computing we ask the question whi…

Article, published 10th Jan 2023: Quantum computers promise to impact industrial applications, for which quantum chemical calculations are required, by virtue of their high accuracy. This perspective explores the challenges and opportunities of applying quantum computers to drug design, discusses where they could transform industria…

Article, published 4th May 2022: President Biden will sign two Presidential directives that will advance national initiatives in quantum information science (QIS), signaling the Biden-Harris Administration’s commitment to this critical and emerging technology.

Article, published 7th Aug 2021: The Economist offers authoritative insight and opinion on international news, politics, business, finance, science, technology and the connections between them.

Article, published 20th Apr 2022: A programmable neutral-atom quantum computer based on a two-dimensional array of qubits led to the creation of 2–6-qubit Greenberger–Horne–Zeilinger states and showed the ability to execute quantum phase estimation and optimization algorithms.

Article, published 1st Jun 2022: Gaussian boson sampling is performed on 216 squeezed modes entangled with three-dimensional connectivity, using Borealis, registering events with up to 219 photons and a mean photon number of 125.

Article, published 9th Jun 2022: The way we can tweak interactions between qubits in quantum computers means the machines could help us create novel structures with weird properties we have never seen in nature

Article, published 21st Apr 2022: Experiments on how anaesthetics alter the behaviour of tiny structures found in brain cells bolster the controversial idea that quantum effects in the brain might explain consciousness

Article, published 14th Sep 2020: Propelling the development of quantum technologies will require widespread literacy about quantum concepts, and a commitment to diversity as a source of competitive advantage.

Article, published 2nd May 2022: Simulating a Quantum Future. Quantum computers are anticipated to revolutionize the way researchers address complex computing problems. These computers are being developed to address major challenges in fundamental scientific fields such as quantum chemistry.

Article, published 26th May 2021: Great leaps are already being made in creating a super secure quantum internet. It could overturn the role of information in our lives and give us a globe-spanning quantum supercomputer

Article, published 21st Jun 2022: MIT researchers developed a method to enable quantum sensors to detect any arbitrary frequency, with no loss of their ability to measure nanometer-scale features. Quantum sensors detect the most minute variations in magnetic or electrical fields, but until now they have only been capable of detectin…

Article, published 23rd Jun 2022: Quantum computing hardware specialists at UNSW have built a quantum processor in silicon to simulate an organic molecule with astounding precision.

Article, published 2nd May 2022: An experiment demonstrates that quantum key distribution networks, which are part of highly secure cryptography schemes, can also detect and locate earthquakes.

Article, published 12th Oct 2020: Quantum computing technologies are advancing, and the class of addressable problems is expanding. What market strategies are quantum computing companies and start-ups adopting?

Article, published 7th Aug 2021: Photonics is the science of light generation, detection and manipulation and is used to develop technologies spanning sources and sensors to quantum computers. It provides the backbone of the internet and will be used in autonomous vehicles, health diagnosis and treatments, and the 5G network.

Article, published 7th Aug 2021: In a world of exciting technological possibilities, among the most significant are those enabled by quantum physics. It is thanks to this field of research that we have the lasers used in precision manufacturing, the transistors on which today’s computers are based and solar cells to provide a clean alternative to fossil fuels.

Article, published 15th Sep 2022: When quantum computers become powerful enough, they could theoretically crack the encryption algorithms that keep us safe. The race is on to find new ones.

Article, published 4th Apr 2022: The road ahead: The diversity of quantum sensing applications is exciting for scientists with many possible applications, but the path to commercializing them may not be straightforward.

1.2.1 Quantum Communication

Article, published 25th May 2022: Scientists have improved their ability to send quantum information across distant computers, and have taken another step toward the network of the future.

Article, published 4th May 2022: President Biden will sign two Presidential directives that will advance national initiatives in quantum information science (QIS), signaling the Biden-Harris Administration’s commitment to this critical and emerging technology.

Article, published 26th May 2021: Great leaps are already being made in creating a super secure quantum internet. It could overturn the role of information in our lives and give us a globe-spanning quantum supercomputer

Article, published 15th Sep 2022: When quantum computers become powerful enough, they could theoretically crack the encryption algorithms that keep us safe. The race is on to find new ones.

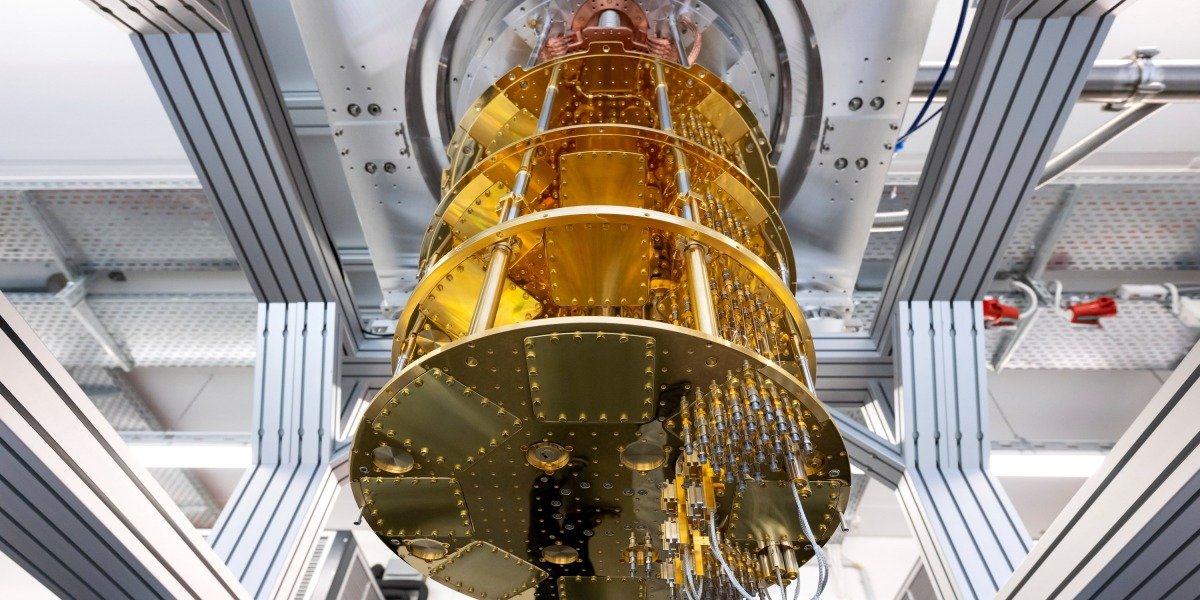

1.2.2 Quantum Computing

Article, published 21st Sep 2022: Scientists to develop a quantum computer dedicated to life sciences. Denmark's Novo Nordisk Foundation is to develop a practical quantum computer with applications ranging from creating new drugs to finding links between genes, environment and disease.

Article, published 20th Apr 2022: A programmable neutral-atom quantum computer based on a two-dimensional array of qubits led to the creation of 2–6-qubit Greenberger–Horne–Zeilinger states and showed the ability to execute quantum phase estimation and optimization algorithms.

Article, published 9th Jun 2022: The way we can tweak interactions between qubits in quantum computers means the machines could help us create novel structures with weird properties we have never seen in nature

Article, published 23rd Jun 2022: Quantum computing hardware specialists at UNSW have built a quantum processor in silicon to simulate an organic molecule with astounding precision.

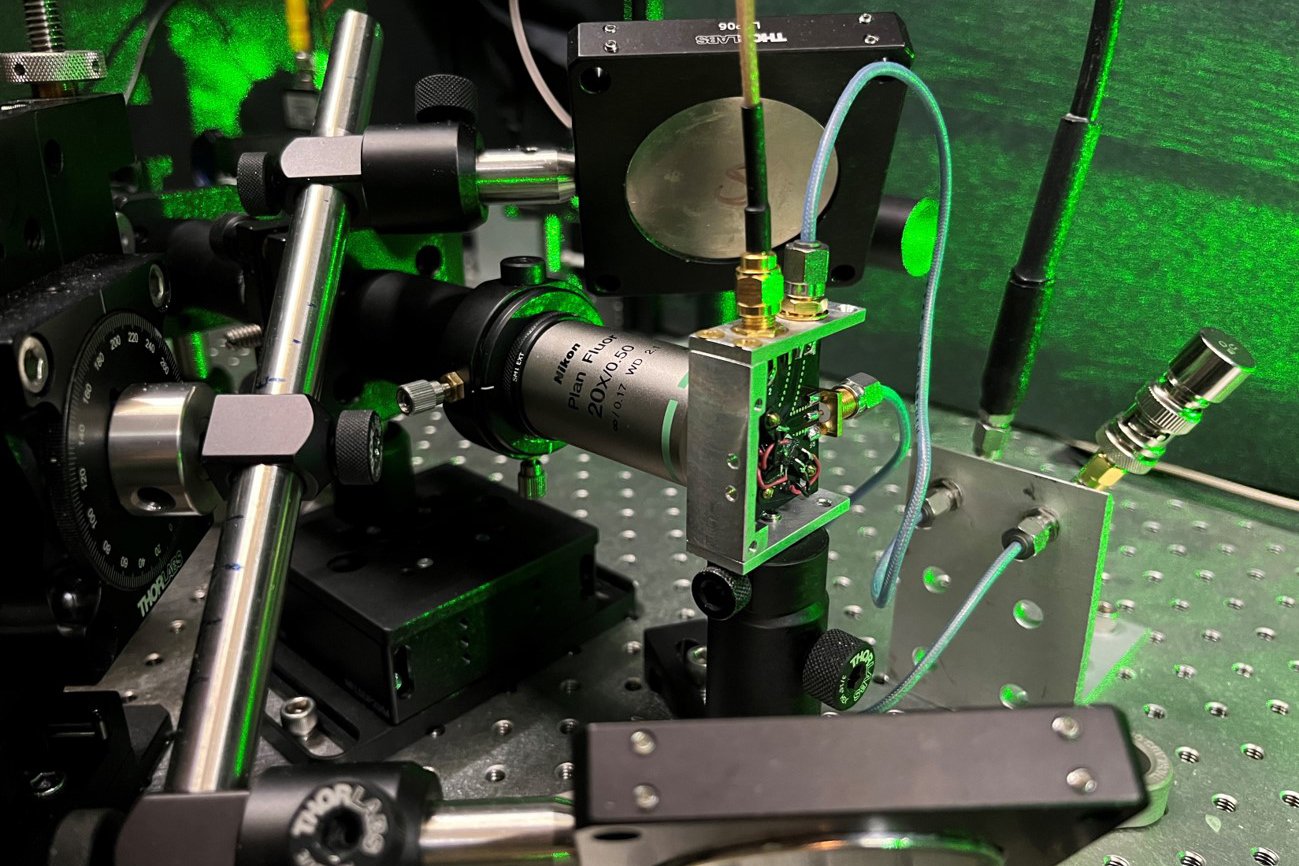

1.2.3 Quantum Sensing and Imaging

Article, published 2nd May 2022: Simulating a Quantum Future. Quantum computers are anticipated to revolutionize the way researchers address complex computing problems. These computers are being developed to address major challenges in fundamental scientific fields such as quantum chemistry.

Article, published 21st Jun 2022: MIT researchers developed a method to enable quantum sensors to detect any arbitrary frequency, with no loss of their ability to measure nanometer-scale features. Quantum sensors detect the most minute variations in magnetic or electrical fields, but until now they have only been capable of detectin…

Article, published 2nd May 2022: An experiment demonstrates that quantum key distribution networks, which are part of highly secure cryptography schemes, can also detect and locate earthquakes.

Article, published 4th Apr 2022: The road ahead: The diversity of quantum sensing applications is exciting for scientists with many possible applications, but the path to commercializing them may not be straightforward.

1.2.4 Quantum Foundations

Article, published 4th Jun 2022: Quantum computing is very susceptible to noise and disruptive influences in the environment. This makes quantum computers “noisy,” since quantum bits, or qubits, lose information when they go out of sync, a process known as decoherence.

Article, published 1st Jun 2022: In this podcast we speak to a molecular geneticist and a philosopher of science

Article, published 1st Jun 2022: Gaussian boson sampling is performed on 216 squeezed modes entangled with three-dimensional connectivity, using Borealis, registering events with up to 219 photons and a mean photon number of 125.

Article, published 21st Apr 2022: Experiments on how anaesthetics alter the behaviour of tiny structures found in brain cells bolster the controversial idea that quantum effects in the brain might explain consciousness

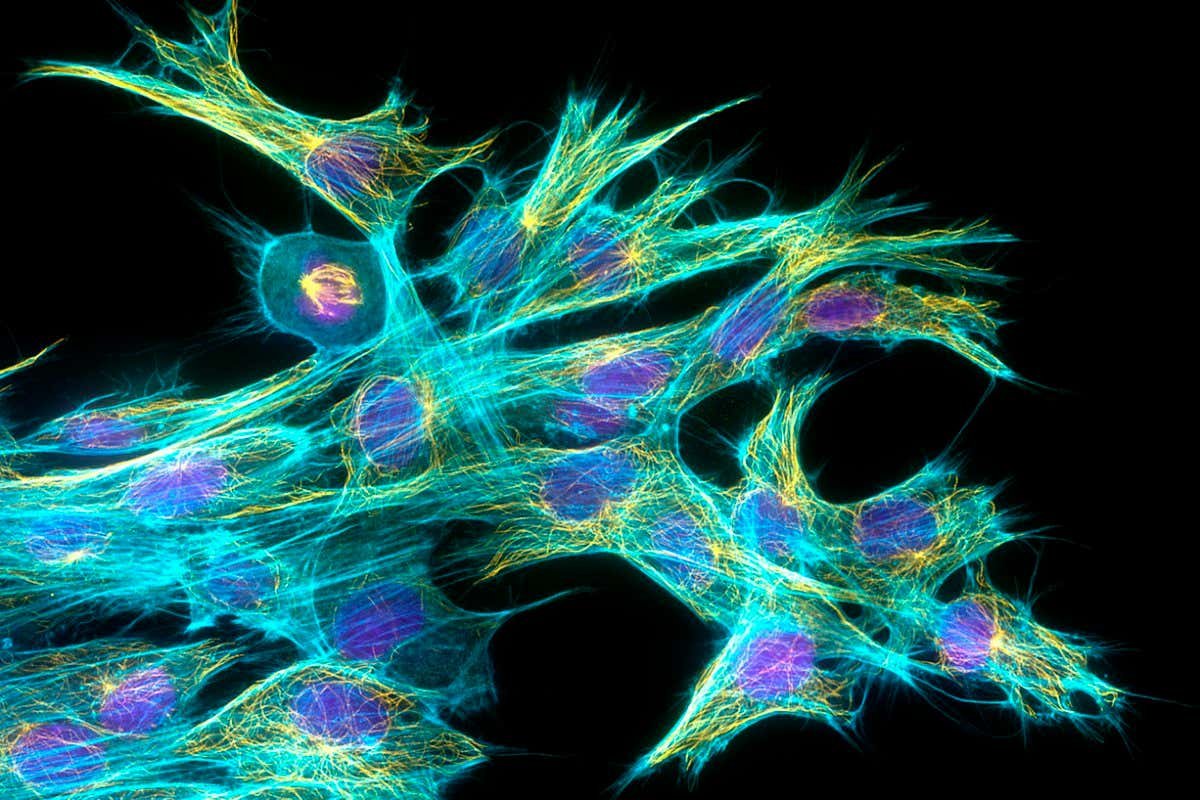

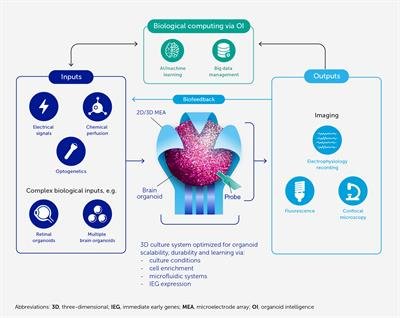

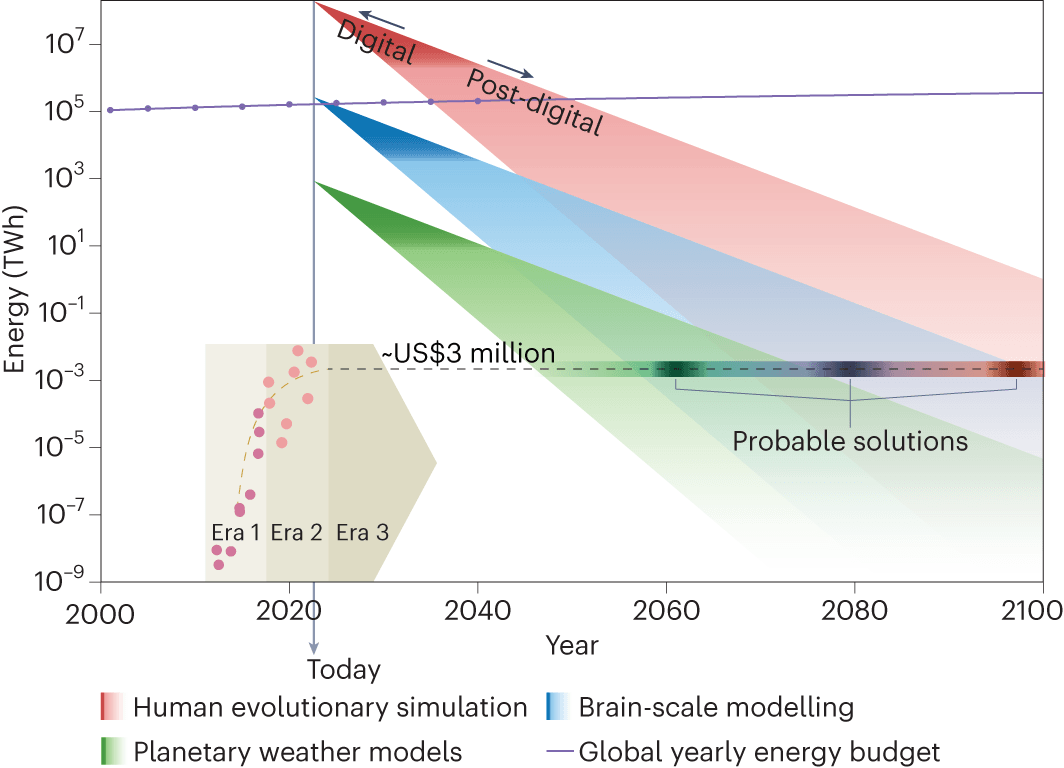

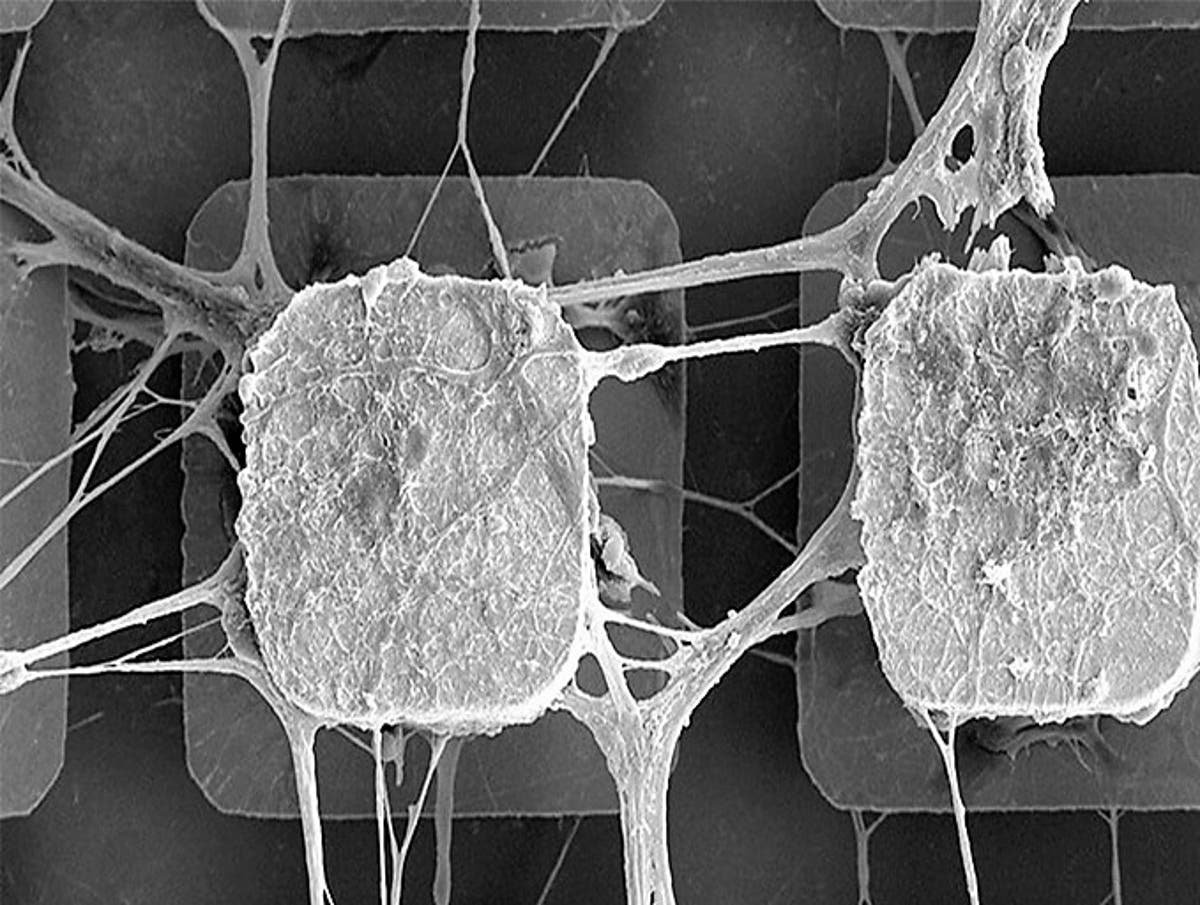

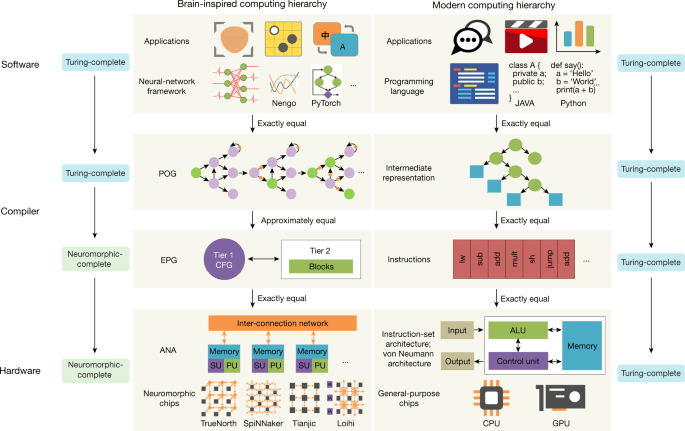

Article, published 7th Aug 2021: Beyond imitation: Zero-shot task transfer on robots by learning concepts as cognitive programs, January 2019, Science Robotics

Article, published 13th Apr 2022: New computing technologies inspired by the brain promise fundamentally different ways to process information with extreme energy efficiency and the ability to handle the avalanche of unstructured and noisy data that we are generating at an ever-increasing rate.

Article, published 23rd Jun 2022: In December 2021, Melbourne-based Cortical Labs grew groups of neurons (brain cells) that were incorporated into a computer chip.

Article, published 25th Jan 2021: Deep neural networks, often criticized as “black boxes,” are helping neuroscientists understand the organization of living brains.

Article, published 13th Jun 2022: MIT engineers built a LEGO-like artificial intelligence chip, with a view toward sustainable, modular electronics. The chip can be reconfigured, with layers that can be swapped out or stacked on, such as to add new sensors or updated processors.

Article, published 11th Aug 2022: Biological computing (or biocomputing) offers potential advantages over silicon-based computing in terms of faster decision-making, continuous learning durin...

Article, published 3rd Jun 2021: Scientists at the Jawaharlal Nehru Centre for Advanced Scientific Research in Bengaluru, an autonomous institute of the Indian government’s Department of Science and Technology, developed a new approach for fabricating an artificial synaptic network (ASN) that resembles the biological neural network …

Article, published 24th May 2022: The next generation of AI may be 1000 times more energy efficient, thanks to computer chips that work like the human brain. A new study shows such neuromorphic chips can run AI algorithms using just a fraction of the energy consumed by ordinary chips.

Article, published 6th May 2022: Researchers in Italy and Austria have constructed a new device that can transmit coherent quantum information as a superposition of single photons. It could be used to fabricate architectures that mimic how the brain works.

Article, published 26th Sep 2023: Cellular computing is a promising and exciting domain. As the field continues to evolve, several future facets are expected to emerge. One potential facet is the development of more sophisticated and efficient cellular-based computing systems that can perform complex calculations and data processing t…

Article, published 20th Jul 2023: Nature Electronics - Substantial improvements in computing energy efficiency, by up to ten orders of magnitude, will be required to solve major computing problems, such as planetary-scale...

Article, published 1st Mar 2023: The report highlights some of our most interesting observations, sketches connections and patterns, and asks what these might mean for the future of development.

Article, published 12th May 2021: Brain development could hold lessons for building better artificial neural networks

1.3.1 Neuromorphic Computing

Article, published 21st Jan 2021: MIT researchers have developed an automated way to design customized hardware that speeds up a robot’s operation. The system, called robomorphic computing, accounts for the robot’s physical layout in suggesting an optimized hardware architecture.

Article, published 3rd Feb 2021: ‘Our aim is to harness the unrivalled computing power of the human brain to dramatically increase the ability of computers,’ professor says

Article, published 3rd Jun 2021: Scientists at the Jawaharlal Nehru Centre for Advanced Scientific Research in Bengaluru, an autonomous institute of the Indian government’s Department of Science and Technology, developed a new approach for fabricating an artificial synaptic network (ASN) that resembles the biological neural network …

Article, published 24th May 2022: The next generation of AI may be 1000 times more energy efficient, thanks to computer chips that work like the human brain. A new study shows such neuromorphic chips can run AI algorithms using just a fraction of the energy consumed by ordinary chips.

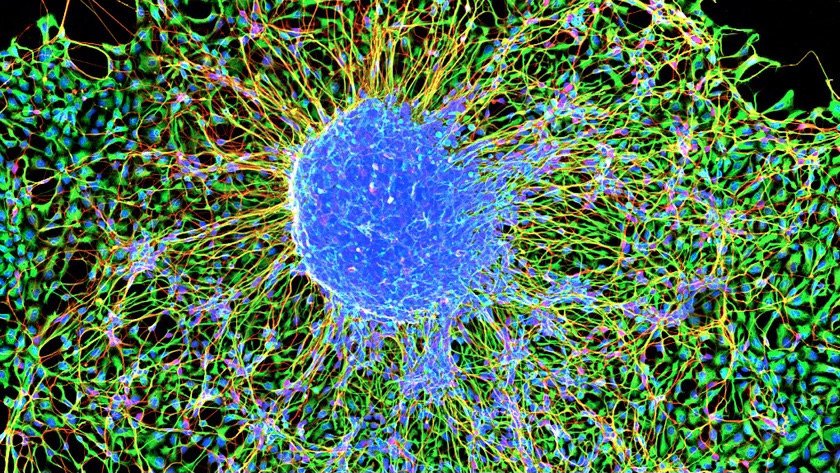

1.3.2 Organoid Intelligence

Article, published 14th Oct 2020: The concept of neuromorphic completeness and a system hierarchy for neuromorphic computing are presented, which could improve programming-language portability, hardware completeness and compilation feasibility of brain-inspired computing systems

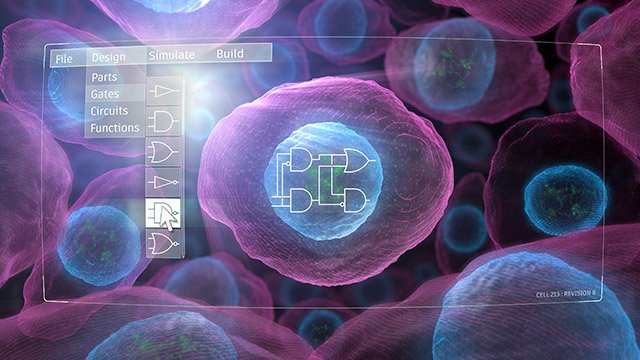

1.3.3 Cellular Computing

Article, published 13th Apr 2022: New computing technologies inspired by the brain promise fundamentally different ways to process information with extreme energy efficiency and the ability to handle the avalanche of unstructured and noisy data that we are generating at an ever-increasing rate.

Article, published 25th Jan 2021: Deep neural networks, often criticized as “black boxes,” are helping neuroscientists understand the organization of living brains.

Article, published 13th Jun 2022: MIT engineers built a LEGO-like artificial intelligence chip, with a view toward sustainable, modular electronics. The chip can be reconfigured, with layers that can be swapped out or stacked on, such as to add new sensors or updated processors.

1.3.4 Optical Computing

Article, published 24th Jun 2021: One researcher proposes a new way to stimulate visual neurons in the brain, with inspiration from human evolution

Article, published 6th May 2022: Researchers in Italy and Austria have constructed a new device that can transmit coherent quantum information as a superposition of single photons. It could be used to fabricate architectures that mimic how the brain works.

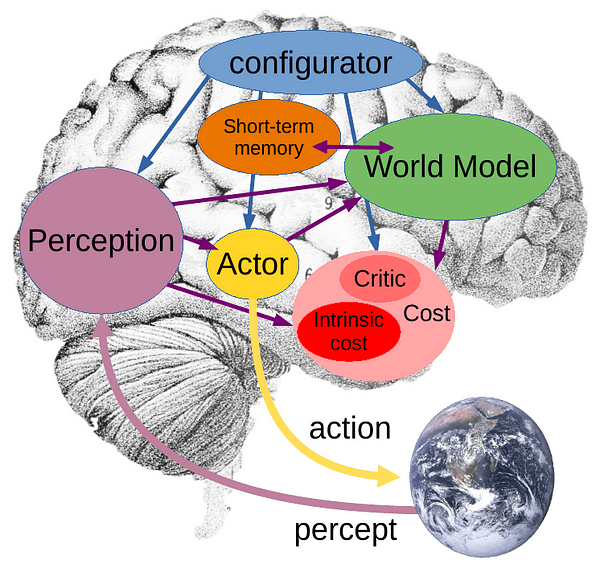

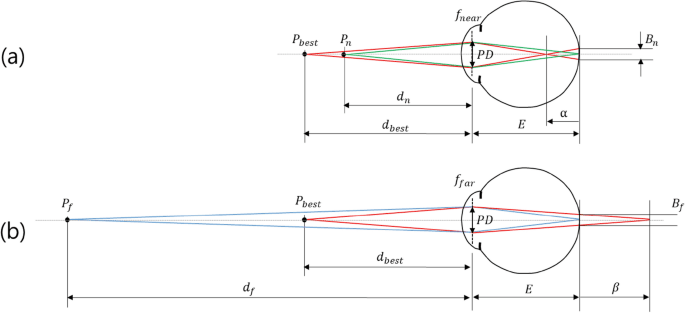

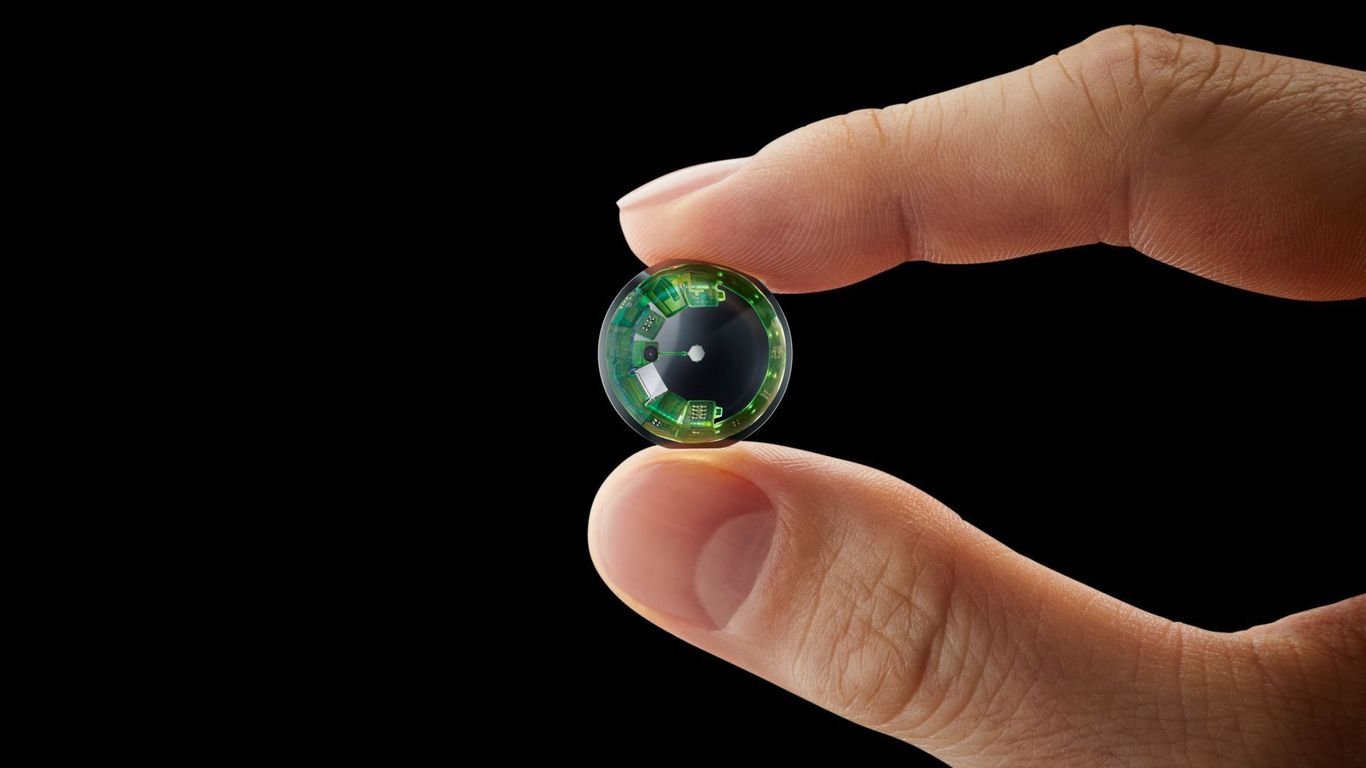

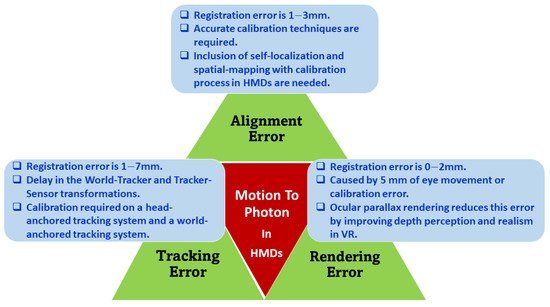

Article, published 2nd Sep 2022: A new class of machine, because it grasps the symbols in language, music and programming and uses them in ways that seem creative. A bit like a human. The promise and perils of a breakthrough in machine intelligence.

Article, published 13th Apr 2022: Review of The Warehouse: A Novel by Rob Hart. A satirical tale from 2019 of a near future dominated by a single power-hungry firm is gripping and cerebral.

Article, published 22nd Sep 2022: After decades of relative dormancy, augmented and virtual reality (AR and VR) are among the fastest developing consumer product technologies today. Scientists are exploring new material designs to make smaller and denser pixel displays.

Article, published 12th Apr 2022: Professor of Philosophy and Neural Science, Dr. David Chalmers, about his book Reality+: Virtual Worlds and the Problems of Philosophy.

Article, published 31st May 2023: Scientific Reports - Extended depth of field in augmented reality

Article, published 24th Jun 2022: Analyst Ming-Chi Kuo predicts that Apple’s entry into the space will be a catalyst for the growth of virtual and augmented reality, and dubs the company a “game-changer for the headset industry.”

Article, published 30th Mar 2022: A startup’s prototype shows how augmented reality vision could come to the surface of your eyeballs.

Article, published 30th Jun 2022: Experts are split about the likely evolution of a truly immersive “metaverse.” They expect augmented- and mixed-reality enhancements will become more useful in people’s daily lives. Many worry that current online problems may be magnified.

Article, published 26th Sep 2023.

Article, published 2nd Apr 2023: Augmented reality and virtual reality technologies are witnessing an evolutionary change in the 5G and Beyond (5GB) network due to their promising ability to enable an immersive and interactive environment by coupling the virtual world with the real one. However, the requirement of low-latency conne…

1.4.1 Augmented reality hardware

Article. Published: 24th Jun 2022. Analyst Ming-Chi Kuo predicts that Apple’s entry into the space will be a catalyst for the growth of virtual and augmented reality, and dubs the company a “game-changer for the headset industry.”

1.4.2 Augmented experiences

Article. Published: 22nd Sep 2022. After decades of relative dormancy, augmented and virtual reality (AR and VR) are among the fastest developing consumer product technologies today. Scientists are exploring new material designs to make smaller and denser pixel displays.

Article. Published: 30th Mar 2022. A startup’s prototype shows how augmented reality vision could come to the surface of your eyeballs.

1.4.3 AR platforms

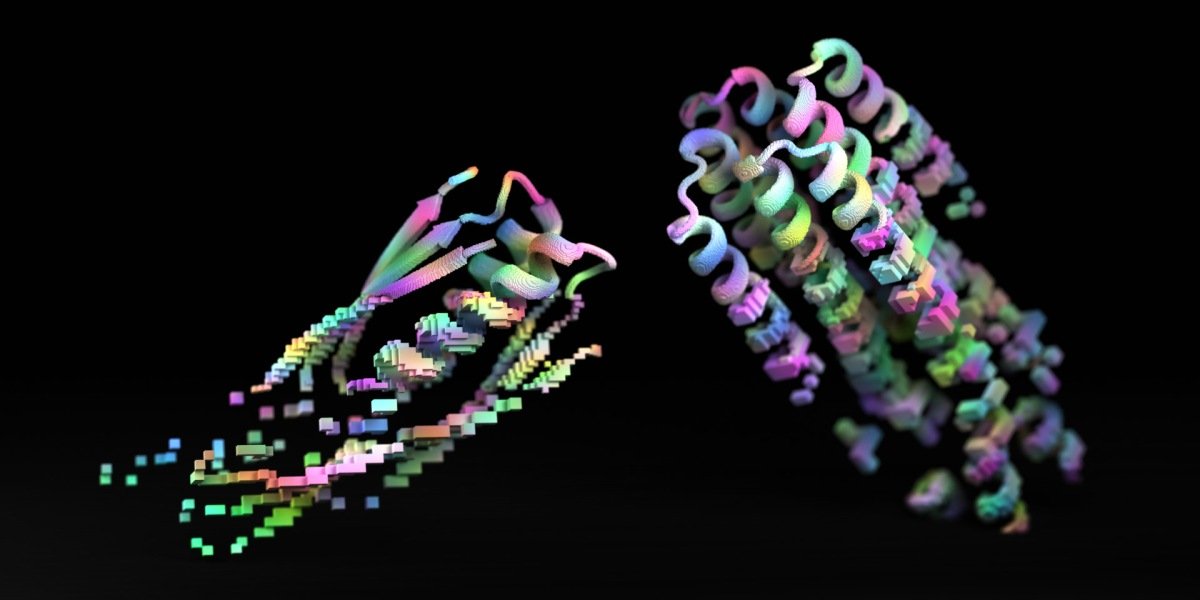

Article. Published: 20th Sep 2022. A new AI tool could help researchers discover previously unknown proteins and design entirely new ones. When harnessed, it could help unlock the development of more efficient vaccines, speed up research for the cure to cancer, or lead to completely new materials.

Article. Published: 13th Apr 2022. Review of The Warehouse: A Novel by Rob Hart. A satirical tale from 2019 of a near future dominated by a single power-hungry firm is gripping and cerebral.

Article. Published: 30th Jun 2022. Experts are split about the likely evolution of a truly immersive “metaverse.” They expect augmented- and mixed-reality enhancements will become more useful in people’s daily lives. Many worry that current online problems may be magnified.

1.4.4 Human factors of AR

Article. Published: 12th Apr 2022. Professor of Philosophy and Neural Science, Dr. David Chalmers, about his book Reality+: Virtual Worlds and the Problems of Philosophy.

Article. Published: 20th Sep 2022. A new AI tool could help researchers discover previously unknown proteins and design entirely new ones. When harnessed, it could help unlock the development of more efficient vaccines, speed up research for the cure to cancer, or lead to completely new materials.

Article. Published: 26th Oct 2022. Augmented collective intelligence. Human-AI networks in the future of work. A playbook for designing and managing a collective intelligence: a supermind.

Article. Published: 26th Sep 2023.

Article. Published: 30th May 2022. Artificial intelligence and smart robots are becoming increasingly capable in the workplace. Here we look at what this means for humans and how creating the right blended workforce for the future is key to success.

Article. Published: 10th Aug 2022. Each of the paradigms of thinking in the tech industry that got us where we are should be overhauled by a human-centered focus.

Article. Published: 1st Mar 2023. The report highlights some of our most interesting observations, sketches connections and patterns, and asks what these might mean for the future of development.

Article. Published: 7th Feb 2022. When we rely on machines to make decisions, we substitute data-driven calculations for human judgment. A closer look at how we are using AI systems today suggests that we may be wrong in assuming that their growing power is mostly for the good.

1.5.1 Large-scale collaboration

Article. Published: 10th Aug 2022. Each of the paradigms of thinking in the tech industry that got us where we are should be overhauled by a human-centered focus.

1.5.2 Smarter teams

Article. Published: 26th Oct 2022. Augmented collective intelligence. Human-AI networks in the future of work. A playbook for designing and managing a collective intelligence: a supermind.

Article. Published: 7th Feb 2022. When we rely on machines to make decisions, we substitute data-driven calculations for human judgment. A closer look at how we are using AI systems today suggests that we may be wrong in assuming that their growing power is mostly for the good.

1.5.3 Collective cognition

Article. Published: 30th May 2022. Artificial intelligence and smart robots are becoming increasingly capable in the workplace. Here we look at what this means for humans and how creating the right blended workforce for the future is key to success.

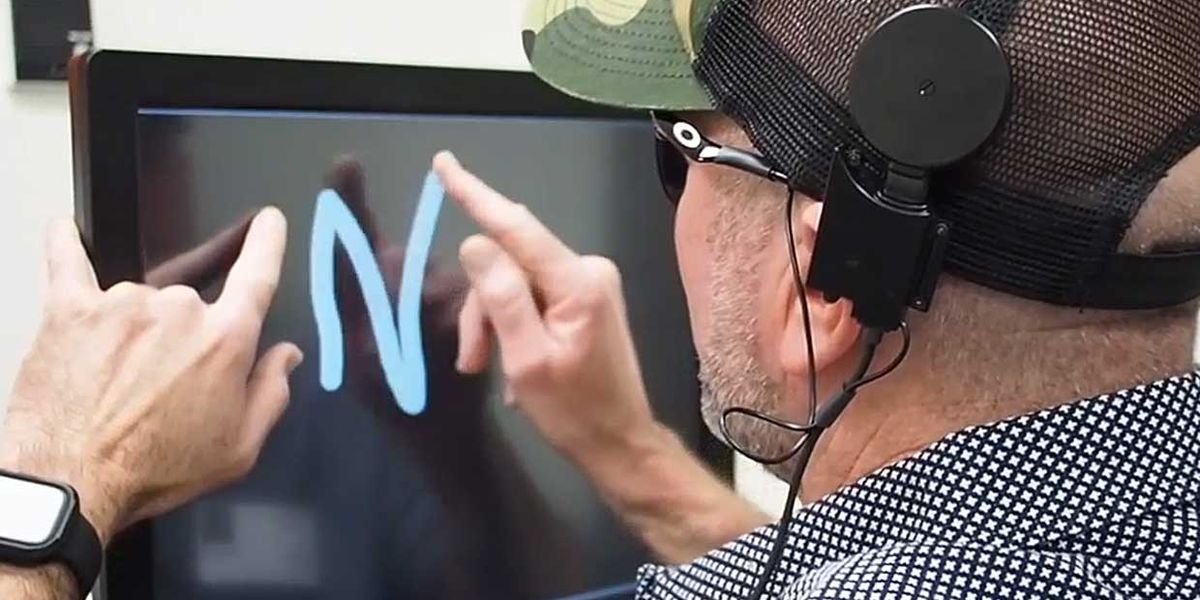

1.5.4 Human-computer interaction

Article. Published: 2nd Sep 2022. A new class of machine, because it grasps the symbols in language, music and programming and uses them in ways that seem creative. A bit like a human. The promise and perils of a breakthrough in machine intelligence.

Article. Published: 12th May 2022. Researchers have created autonomous particles covered with patches of protein “motors.” They hope these bots will tote lifesaving drugs through bodily fluids.

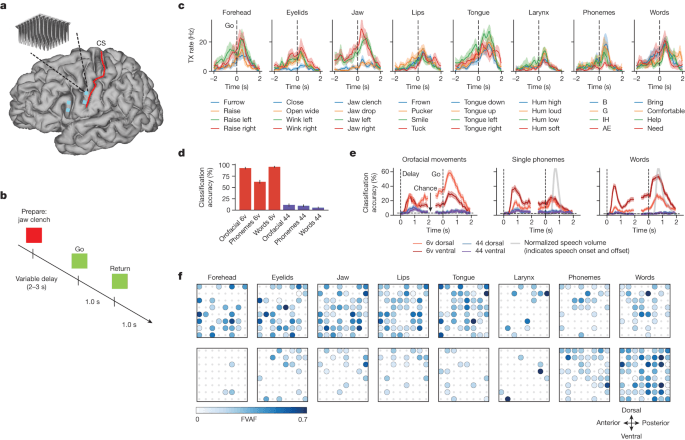

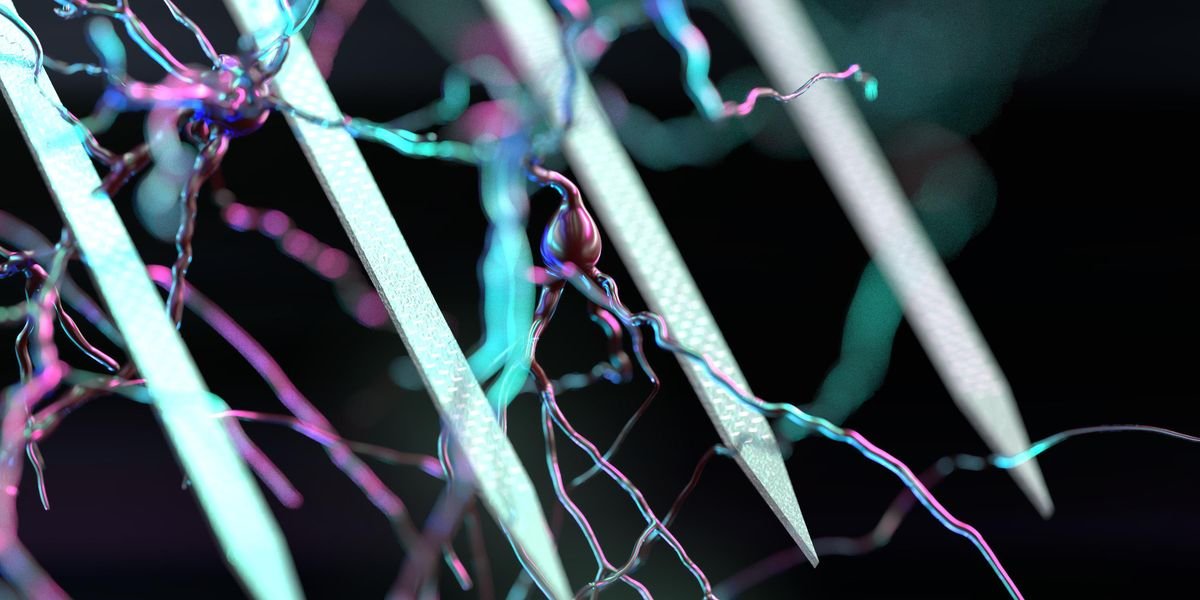

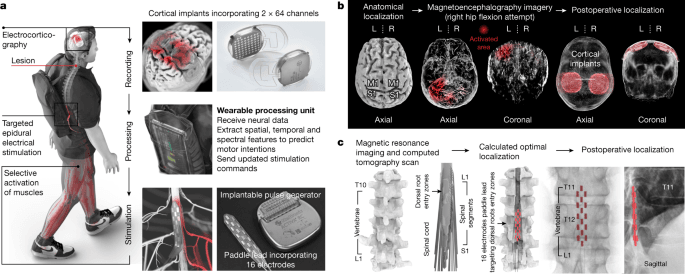

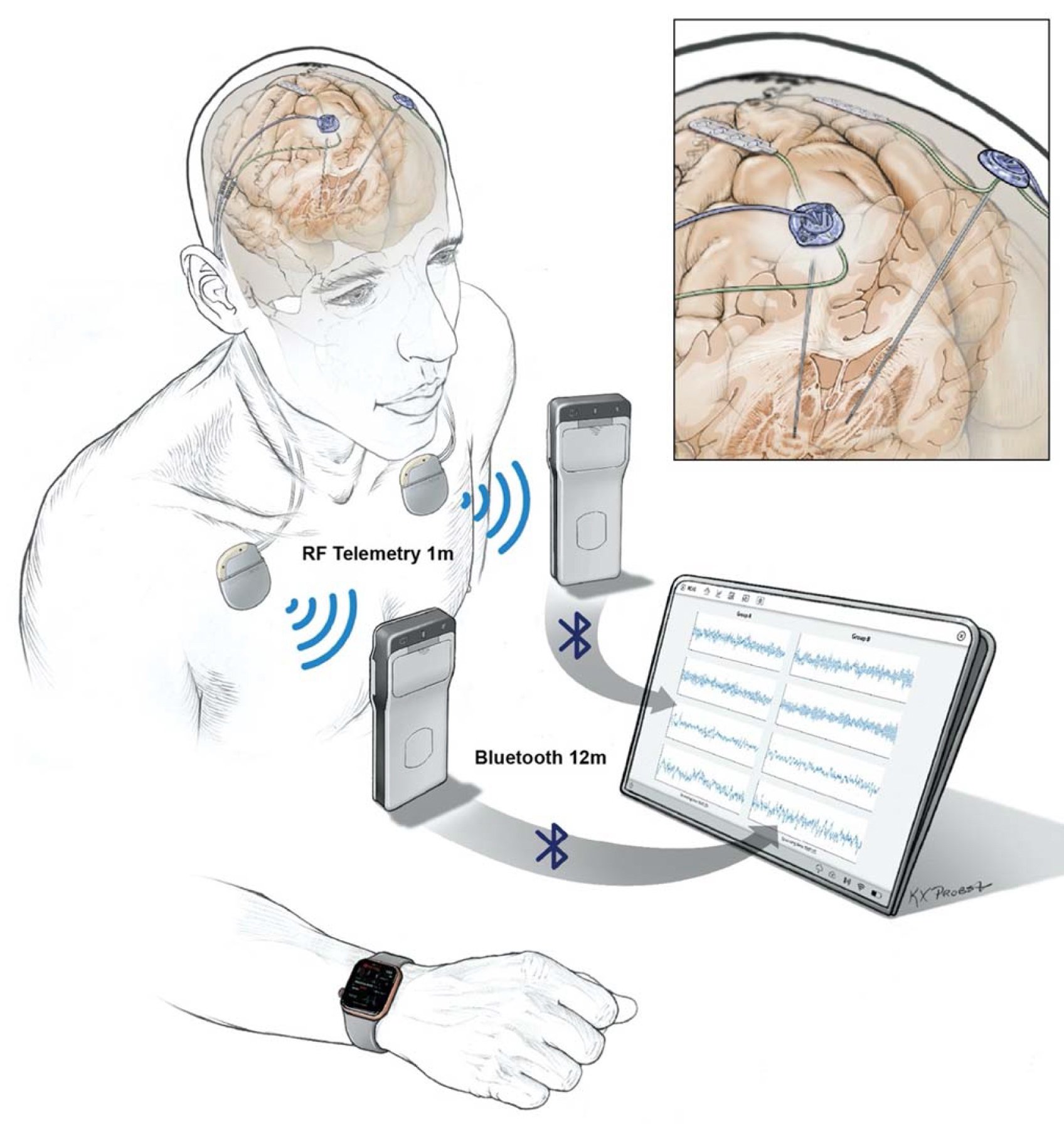

Article. Published: 23rd Aug 2023. Nature - A study using high-density surface recordings of the speech cortex in a person with limb and vocal paralysis demonstrates real-time decoding of brain activity into text, speech sounds and...

Article. Published: 23rd Aug 2023. A speech-to-text brain–computer interface that records spiking activity from intracortical microelectrode arrays enabled an individual who cannot speak intelligibly to achieve 9.1 and 23.8% word error rates on a 50- and 125,000-word vocabulary, respectively.

Article. Published: 7th Sep 2022. Brain electrodes designed to mimic the hippocampus appear to boost the encoding of memories, and are twice as effective in people with poor memory.

Article. Published: 6th Apr 2022. MRI data from more than 100 studies have been aggregated to yield new insights about brain development and ageing, and create an interactive open resource for comparison of brain structures throughout the human lifespan, including those associated with neurological and psychiatric disorders.

Article. Published: 6th Jul 2022. Electrodes implanted in the brain were known to release impulses that may “normalise” overactive connections within a specific circuit of the organ, but researchers were previously unsure whether the treatment offered long-term relief from severe depression.

Article. Published: 23rd Sep 2020. Elon Musk’s brain implants may sound like science fiction, but this future may be closer than you think. The OECD Neurotechnology Recommendation aims to ensure that the technology is developed in a responsible way, while still supporting innovation.

Article. Published: 24th Jan 2019. The field of brain–computer interfaces (BCIs) has grown rapidly in the last few decades, allowing the development of ever faster and more reliable assistive technologies for converting brain activity into control signals for external devices for people with severe disabilities [...]

Article. Published: 17th Mar 2022. Most studies linking features in brain imaging to traits such as cognitive abilities are too small to be reliable, argues a controversial analysis.

Article. Published: 13th Jul 2022. Imec’s semiconductor fab brings exponential growth to neurotech. Version 2.0 of the system, demonstrated last year, increases the sensor count by about an order of magnitude over that of the initial version produced just four years earlier.

Article. Published: 22nd Jun 2022. Noninvasive brain stimulation directly stimulates or inhibits neurons in specific brain regions in an effort to remodel circuits of interconnected cells. It has shown benefits for depression and in stroke rehabilitation, where it can help improve movement and speech.

Article. Published: 3rd Sep 2020. A pig now has one of his implants in its brain.

Article. Published: 9th Nov 2017. Artificial intelligence and brain–computer interfaces must respect and preserve people’s privacy, identity, agency and equality, say Rafael Yuste, Sara Goering and colleagues.

Article. Published: 6th Feb 2022. Powerful tricks from computer science and cybernetics show how evolution ‘hacked’ its way to intelligence from the bottom up.

Article. Published: 7th Aug 2021. Neural interfaces, brain-computer interfaces and other devices that blur the lines between mind and machine have extraordinary potential. These technologies could transform medicine and fundamentally change how we interact with technology and each other. At the same time, neural interfaces raise critical ethical concerns over issues such as privacy, autonomy, human rights and equality of access. This Royal Society Perspective takes a future-facing look into possible applications of neural and brain-computer interfaces, exploring the potential benefits and risks of the technologies and setting out a course towards maximising the former and minimising the latter.

Article. Published: 23rd May 2022. International Human Rights Protection Gaps in the Age of Neurotechnology is the first comprehensive review of international human rights law as applied to neurotechnology.

Article. Published: 14th Jun 2021. What if you could knock someone off merely by thinking about it – and no way to trace those thoughts to the crime? And more horrendous still: what if you could harm someone because of some subconscious desire, one that you weren’t even aware of? This isn’t the stuff of science fiction. It might even…

Article. Published: 15th Nov 2018. Recent advances in neuroscience have paved the way to innovative applications that cognitively augment and enhance humans in a variety of contexts. This paper aims at providing a snapshot of the current state of the art and a motivated forecast of the most likely developments in the next two decades…

Article. Published: 7th Aug 2021. Data, research and country reviews on innovation including innovation in science and technology, research and knowledge management, public sector innovation and e-government. This Recommendation aims to provide guidance for governments and innovators to anticipate and address the ethical, legal and…

Article. Published: 21st Dec 2019. Cognitive enhancement, a rather broad-ranging principle, can be achieved in various ways: healthy eating and consistent physical exercise can lead to long-term improvements in many cognitive domains; commonplace stimulants such as caffeine, on the other hand, temporarily raise levels of alertness, a…

Article. Published: 1st Jan 2021. Advancements in novel neurotechnologies, such as brain computer interfaces (BCI) and neuromodulatory devices such as deep brain stimulators (DBS), will have profound implications for society and human rights. While these technologies are improving the diagnosis and treatment of mental and neurologic…

Article. Published: 12th Apr 2021. Two patients who had been paralyzed by spinal cord injuries were able to use the brain-computer interface to control a computer cursor in their homes.

Article. Published: 22nd Sep 2022. Memory retention gets worse with age. For people with brain injuries, such as from a stroke or physical trauma to the brain, the impairment can be utterly debilitating. The central idea is simple: replicate the hippocampus’ signals with a digital replacement. It’s no easy task.

Article. Published: 10th May 2022. Brain-computer interfaces have the potential to eventually help restore functionality to those with paralysis and other conditions. A new clinical trial seeks to help patients with severe paralysis.

Article. Published: 20th Apr 2022. An estimated 35 people have had a BCI implanted long-term in their brain. Only around a dozen laboratories conduct such research, but that number is growing. Implants are becoming more sophisticated, and are attracting commercial interest.

Article. Published: 3rd May 2022. Nature Reviews Neuroscience - Various theories have been developed for the biological and physical basis of consciousness. In this Review, Anil Seth and Tim Bayne discuss four prominent theoretical...

Article. Published: 3rd Jun 2022. The increasing availability of brain data within and outside the biomedical field, combined with the application of artificial intelligence (AI) to brain data analysis, poses a challenge for ethics and governance.

Article. Published: 24th May 2023. A reliable digital bridge restored communication between the brain and spinal cord and enabled natural walking in a participant with spinal cord injury.

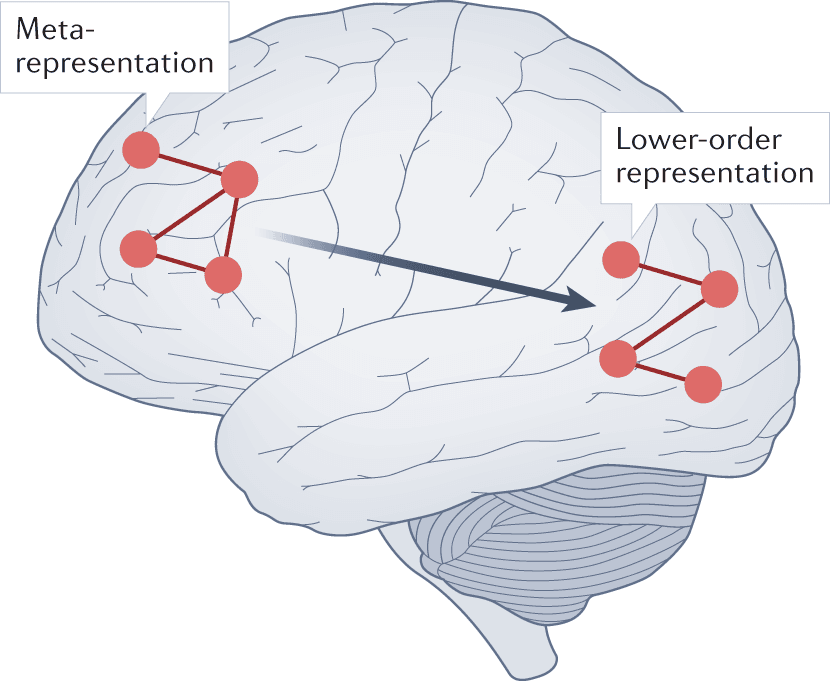

2.1.1 Fundamentals of cognition

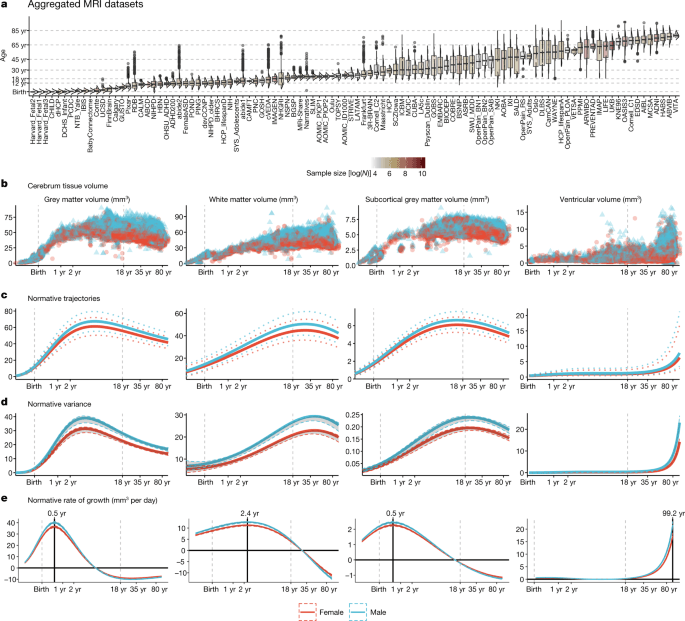

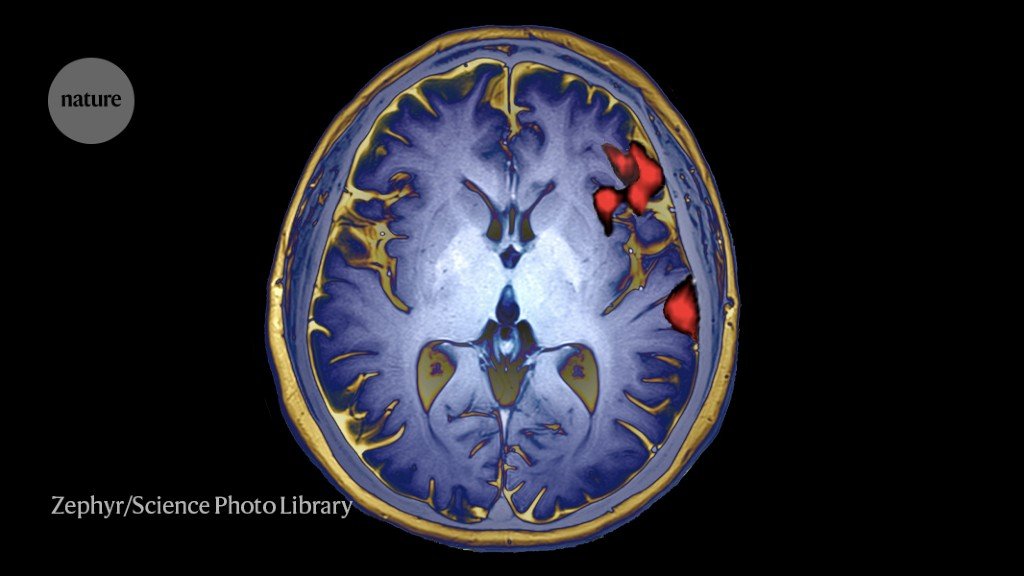

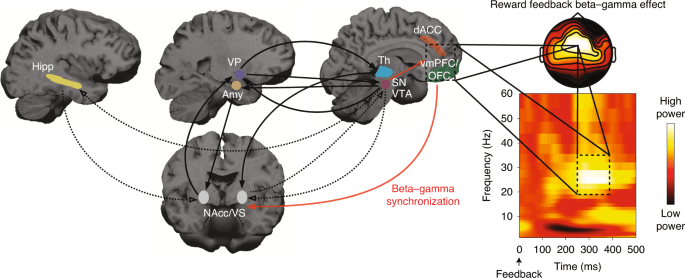

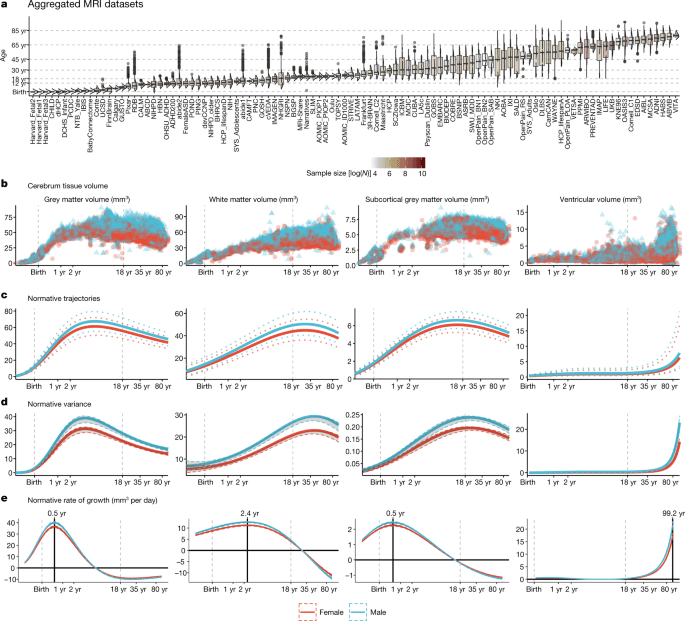

Article. Published: 6th Apr 2022. MRI data from more than 100 studies have been aggregated to yield new insights about brain development and ageing, and create an interactive open resource for comparison of brain structures throughout the human lifespan, including those associated with neurological and psychiatric disorders.

Article. Published: 6th Jul 2022. Electrodes implanted in the brain were known to release impulses that may “normalise” overactive connections within a specific circuit of the organ, but researchers were previously unsure whether the treatment offered long-term relief from severe depression.

Article. Published: 17th Mar 2022. Most studies linking features in brain imaging to traits such as cognitive abilities are too small to be reliable, argues a controversial analysis.

Article. Published: 7th Jun 2021. A research team from the University of Copenhagen and University of Helsinki demonstrates it is possible to predict individual preferences based on how a person’s brain responses match up to others. This could potentially be used to provide individually-tailored media content, and perhaps even to enl…

Article. Published: 13th Jul 2022. Imec’s semiconductor fab brings exponential growth to neurotech. Version 2.0 of the system, demonstrated last year, increases the sensor count by about an order of magnitude over that of the initial version produced just four years earlier.

Article. Published: 23rd May 2022. International Human Rights Protection Gaps in the Age of Neurotechnology is the first comprehensive review of international human rights law as applied to neurotechnology.

Article. Published: 10th Apr 2021. BCI technology has emerged as a major area of scientific research and increasingly consumer technology.

Article. Published: 3rd May 2021. NIH BRAIN Initiative-funded study opens the door to correlating deep brain activity and behavior.

Article. Published: 3rd Jun 2022. The increasing availability of brain data within and outside the biomedical field, combined with the application of artificial intelligence (AI) to brain data analysis, poses a challenge for ethics and governance.

2.1.2 Brain monitoring

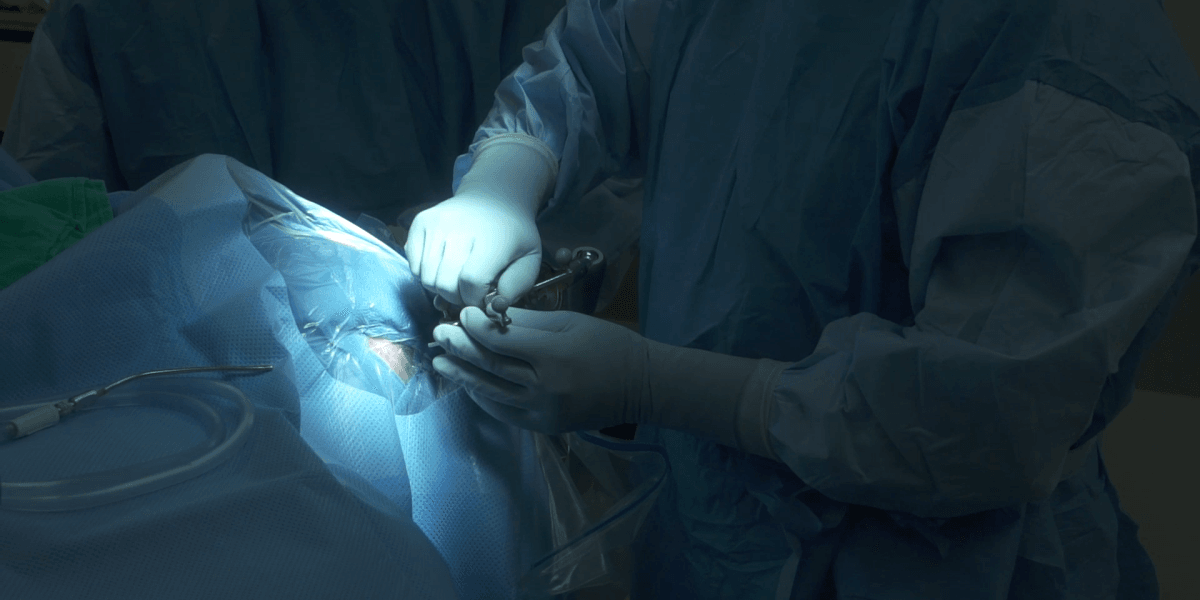

Article. Published: 22nd Jun 2022. Noninvasive brain stimulation directly stimulates or inhibits neurons in specific brain regions in an effort to remodel circuits of interconnected cells. It has shown benefits for depression and in stroke rehabilitation, where it can help improve movement and speech.

Article. Published: 18th Jan 2021. Selective and personalized neuromodulation of orbitofrontal beta–gamma rhythms in humans, achieved with an alternating current, robustly attenuates obsessive–compulsive behavior for 3 months.

Article. Published: 21st Jun 2021. A computerized brain implant effectively relieves short-term and chronic pain in rodents, a new study finds.

Article. Published: 25th May 2021. The study, a tour de force in bioengineering, comes after two decades of research on brain-to-brain synchrony in people.

Article. Published: 10th May 2022. Brain-computer interfaces have the potential to eventually help restore functionality to those with paralysis and other conditions. A new clinical trial seeks to help patients with severe paralysis.

Article. Published: 20th Apr 2022. An estimated 35 people have had a BCI implanted long-term in their brain. Only around a dozen laboratories conduct such research, but that number is growing. Implants are becoming more sophisticated, and are attracting commercial interest.

2.1.3 Neuromodulation systems

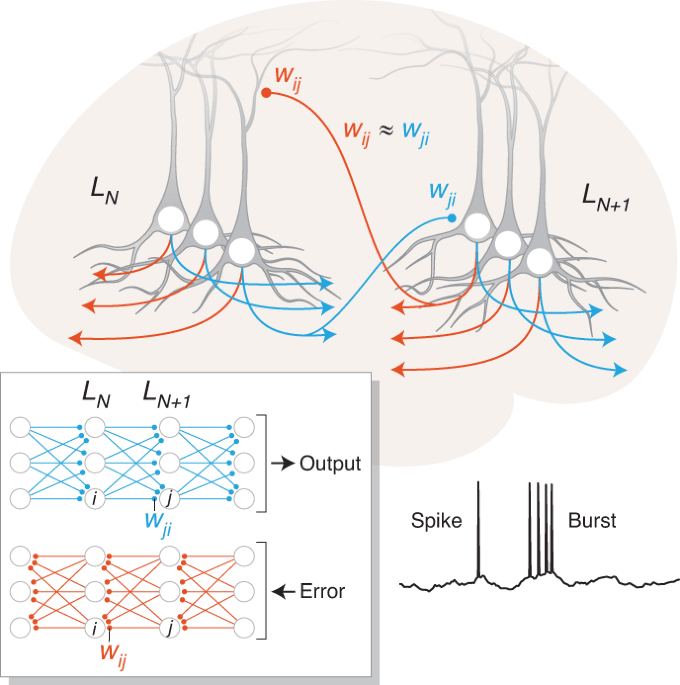

Article. Published: 13th May 2021. For decades, researchers have wondered whether algorithms used by artificial neural networks might be implemented by biological networks. Payeur et al. have strengthened the connection between neuroscience and artificial intelligence by showing that biologically plausible mechanisms can approximate …

Article. Published: 21st Feb 2022. Harvard researchers used lab-grown clumps of neurons called organoids to reveal how three genes linked to autism affect the timing of brain development.

Article. Published: 12th Apr 2021. The human brain, just like whatever you’re reading this on, uses electricity to function. Neurons are constantly sending and receiving electrical signals. Everyone’s brain works a bit differently, and scientists are now getting closer to establishing how electrical activity is functioning in individ…

Article. Published: 6th Feb 2022. Powerful tricks from computer science and cybernetics show how evolution ‘hacked’ its way to intelligence from the bottom up.

Article. Published: 12th Apr 2021. Two patients who had been paralyzed by spinal cord injuries were able to use the brain-computer interface to control a computer cursor in their homes.

2.1.4 Exogenous cognition

Article. Published: 7th Sep 2022. Brain electrodes designed to mimic the hippocampus appear to boost the encoding of memories, and are twice as effective in people with poor memory.

Article. Published: 5th Oct 2020. Encoding memories in engram cells is controlled by large-scale remodeling of the proteins and DNA that make up cells’ chromatin, according to an MIT study. This chromatin remodeling, which allows specific genes involved in storing memories to become more active, takes place in multiple stages spread…

Article. Published: 26th May 2021. Findings could provide important insights into human conditions such as PTSD, anxiety.

Article. Published: 22nd Sep 2022. Memory retention gets worse with age. For people with brain injuries, such as from a stroke or physical trauma to the brain, the impairment can be utterly debilitating. The central idea is simple: replicate the hippocampus’ signals with a digital replacement. It’s no easy task.

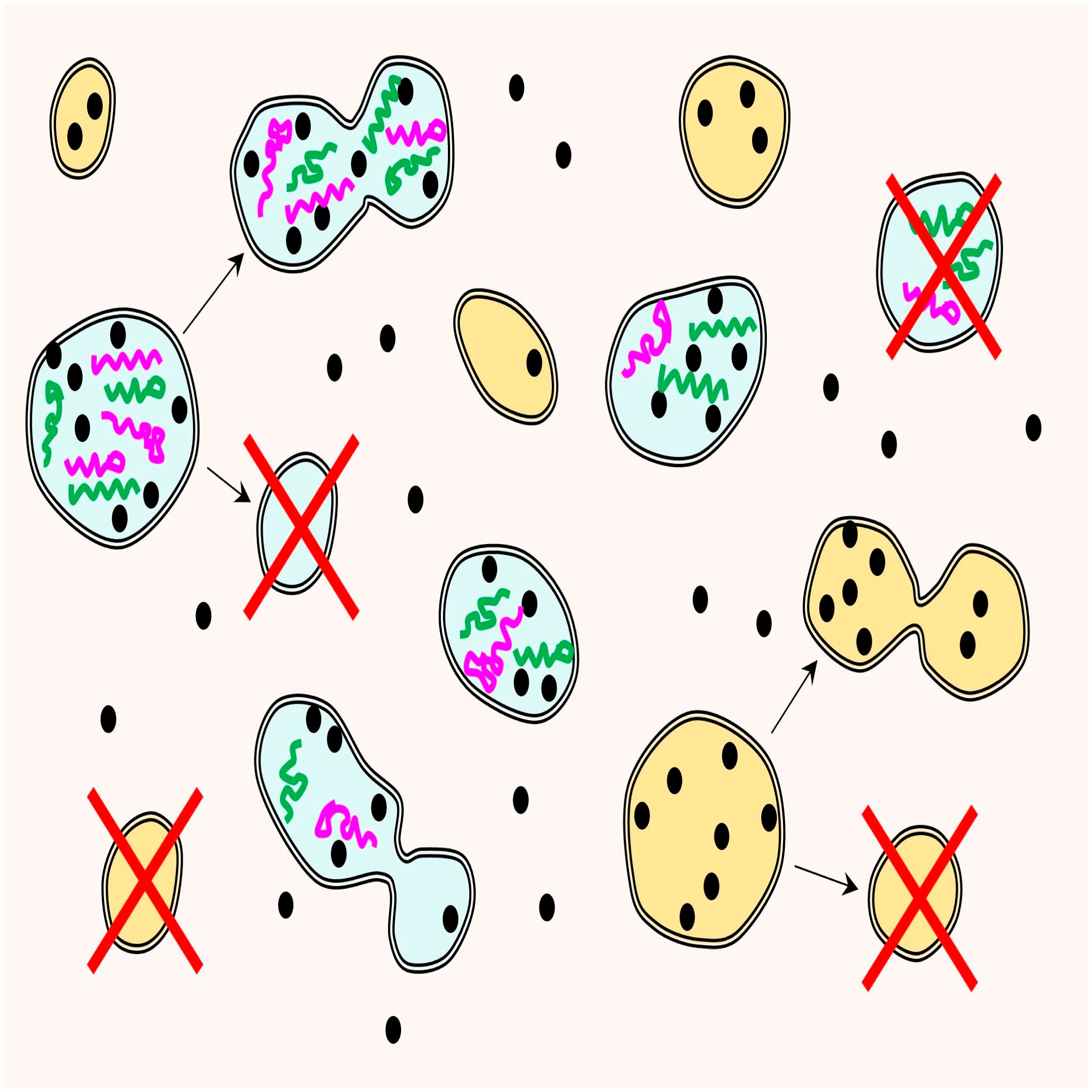

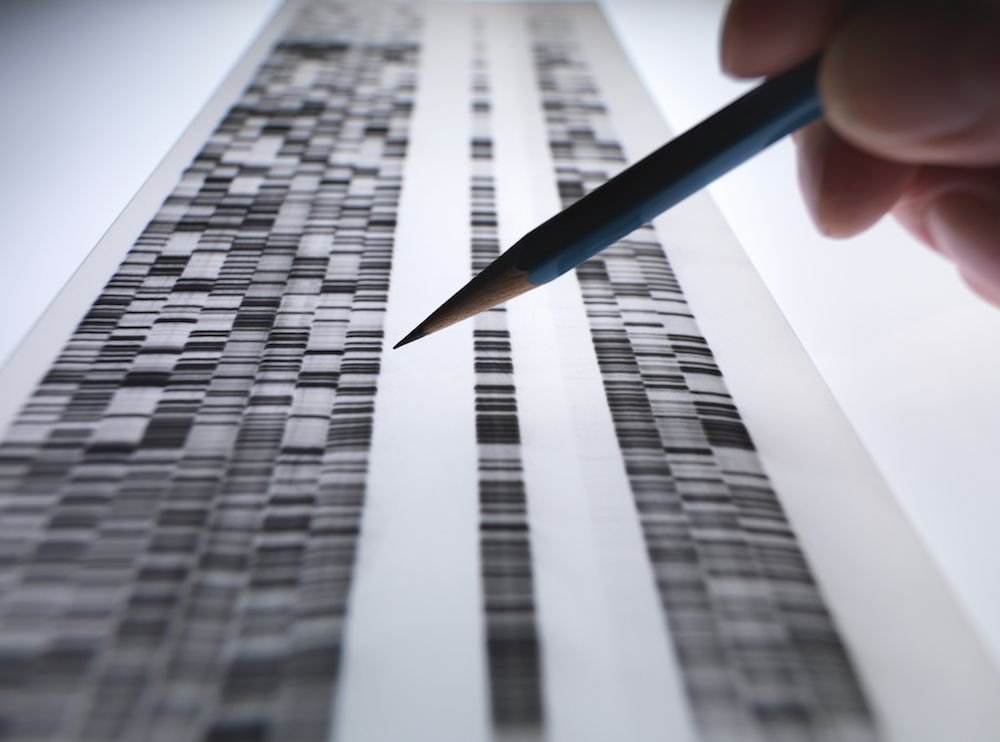

Article. Published: 6th Jan 2021. Gene therapy using “base editor” derived from CRISPR extends life span, preserved aortas in mice with progeria

Article. Published: 21st Jun 2021. This vision describes the most compelling research priorities and opportunities in human genomics for the coming decade, signaling a new era in genomics for NHGRI and the field.

Article. Published: 8th Jun 2021. Mechanisms of many human chronic diseases involve abnormal action of the immune system and/or altered metabolism. The microbiome, an important regulator of metabolic and immune-related phenotypes, has been shown to be associated with or participate in the development of a variety of chronic diseases…

Article. Published: 6th Jun 2022. Instead of deleting genes, epigenetic editing modulates their activity. A new paper tests if it’s able to undo a genetic effect of early alcohol exposure.

Article. Published: 13th Mar 2019. Eric Lander, Françoise Baylis, Feng Zhang, Emmanuelle Charpentier, Paul Berg and specialists from seven countries call for an international governance framework.

Article. Published: 22nd Sep 2022. So far CRISPR has focused on tissues (liver and blood cells) easy for bioengineers to reach. Researchers will have to figure out ways to reach complex areas like muscle safely and efficiently.

Article. Published: 31st May 2022. MIT McGovern Institute neuroscientists expand the CRISPR toolkit with a new, compact Cas7-11 enzyme, making it small enough to fit into a single viral vector for therapeutic applications.

Article. Published: 25th Jun 2020. Three studies showing large DNA deletions and reshuffling heighten safety concerns about heritable genome editing

Article. Published: 30th Jun 2022. The gene-editing technology has led to innovations in medicine, evolution and agriculture, and raised profound ethical questions about altering human DNA.

Article. Published: 18th May 2021. The CRISPR/Cas technology has revolutionized the field of genome editing. The technology has been widely adopted in research and is leading to the development of a wide range of applications. But how do we deal with new technologies like CRISPR as a society? Is CRISPR the new golden standard in soma…

Article. Published: 8th Sep 2022. A mutation present in modern humans seems to drive greater neuron growth than does an ancient hominin version.

Article. Published: 7th Aug 2021. The advent of new genome editing technologies such as CRISPR/CasX has opened new dimensions of what and how genetic interventions into our world are possible. This Opinion addresses the profound ethical questions raised and revived by them.

Article. Published: 3rd Sep 2020. Nearly two years after the birth of the first “CRISPR babies,” an international group of experts warned such human experimentation should not be conducted.

Article. Published: 28th Jun 2023. Fanzor is shown to be an RNA-guided DNA endonuclease, demonstrating that such endonucleases are found in all domains of life and indicating a potential new tool for genome engineering applications.

Article. Published: 7th Aug 2021. This collection of essays is the first written product of The Hastings Center's Initiative in Bioethics and the Humanities. This new initiative, which we created with generous support from the National Endowment for the Humanities and private donors, has three aims. The first is to bring together insights from the humanities and the sciences to advance public conversation about the ancient question, how should we live? The second aim is to cultivate a habit of thinking that increases our chances of talking with, rather than past, others as we attempt to engage in that conversation. The third is to support young scholars in the humanities who seek to promote truly integrative and open-minded public conversation regarding an ethical matter about which they care deeply.

Article. Published: 16th Jun 2022. John Leonard built Intellia Therapeutics with Jennifer Doudna, the Nobel Prize-winning scientist who pioneered gene editing technology. Intellia has figured out how to alter disease-causing genes inside patients, but must first cure itself of financial ills.

Article. Published: 31st May 2022. After years of disappointment, gene-therapy research has undergone a renaissance, with several high-profile drug approvals and a string of promising clinical-trial results against devastating genetic diseases, including sickle-cell disease and some blood cancers.

Article. Published: 15th Mar 2023. Mouse induced pluripotent stem cells derived from differentiated fibroblasts could be converted from male (XY) to female (XX), resulting in cells that could form oocytes and give rise to offspring after fertilization.

Article. Published: 9th May 2022. A new generation of lipid nanoparticles, able to reach the heart, the lung, the brain, would enable the use of gene editing to treat common ailments such as heart disease or Alzheimer’s.

Article. Published: 28th Sep 2019. Abstract. Genetic engineering opens new possibilities for biomedical enhancement requiring ethical, societal and practical considerations to evaluate its implic

Article. Published: 4th Jan 2021. Genomics professionals and the general public have a responsibility to bridge the gap between science and society. The general public has a responsibility to deliberate, as their choices not only impact themselves but also shape society. Conversely, genomics professionals have a responsibility to en…

Article. Published: 6th May 2022. Samira Kiani is a professor of genetic engineering at the University of Pittsburgh, and co-producer of 'Make People Better'. The advance of genetic engineering technology is inevitable, but it is not inevitably evil. To ensure that, we need a new vision for how science is practiced

Article. Published: 9th May 2022. The Non-Communicable Diseases Genetic Heritage Study (NCD-GHS) is a consortium to help produce a comprehensive catalog of human genetic variation in Nigeria and assess the burden and etiological characteristics of non-communicable diseases in 100,000 adults.

Article. Published: 7th Aug 2021. In September 2020, a detailed report on Heritable Human Genome Editing was published. The report offers a translational pathway for the limited approval of germline editing under limited circumstances and assuming various criteria have been met. In this perspective, some three dozen experts from the fields of genome editing, medicine, bioethics, law, and related fields offer their candid reactions to the National Academies/Royal Society report, highlighting areas of support, omissions, disagreements, and priorities moving forward.

Article. Published: 21st Oct 2019. A new DNA-editing technique called prime editing offers improved versatility and efficiency with reduced byproducts compared with existing techniques, and shows potential for correcting disease-associated mutations.

Article. Published: 1st Jun 2021. The ethical debate about what is now called human gene editing (HGE) has gone on for more than 50 years. For nearly that entire time, there has been consensus that a moral divide exists between somatic and germline HGE. Conceptualizing this divide as a barrier on a slippery slope, in this paper, I first…

Article. Published: 7th Aug 2021.

Article. Published: 26th Sep 2023.

Article. Published: 20th Apr 2022. The Human Pangenome Reference Consortium aims to offer the highest quality and most complete human pangenome reference that provides diverse genomic representation across human populations.

Article. Published: 21st Jul 2022. The Tabula Sapiens has revealed discoveries relating to shared behavior and subtle, organ-specific differences across cell types.

Article. Published: 22nd Sep 2022. The technique had largely been limited to editing patients’ cells in the lab. New research shows promise for treating diseases more directly.

Article. Published: 28th Jan 2021. In the past year, there has been more CRISPR news than the awarding of the Nobel Prize in Chemistry to Jennifer Doudna and Emmanuelle Charpentier. Gene editing has continued its forward momentum in major ways, and scientists are beginning to see results.

Article. Published: 7th Aug 2021. Engineering synthetic cells from the bottom up is expected to revolutionize biotechnology. How can synthetic cells support societal transitions necess…

2.2.1 Diagnostics

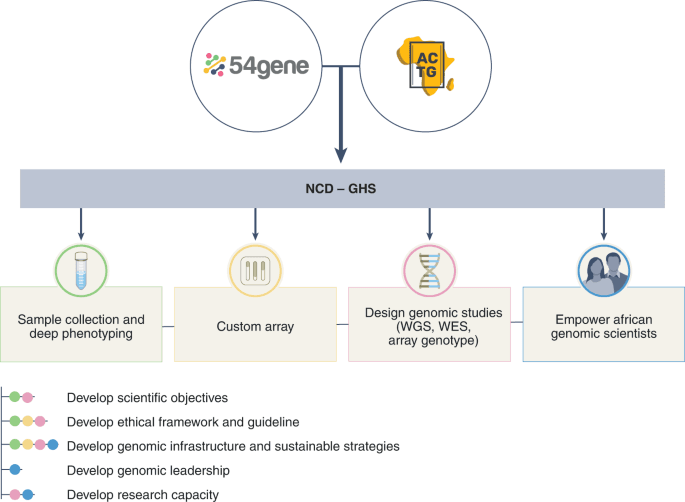

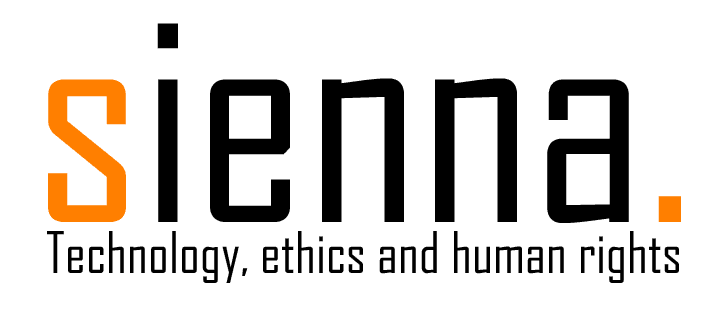

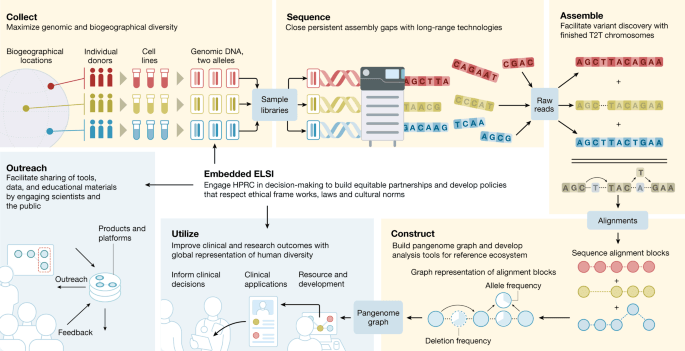

Article. Published: 18th Jan 2021. An international collaboration project to develop and implement novel genome-based disease prediction tools has received over 10 million euros from the...

Article. Published: 9th May 2022. The Non-Communicable Diseases Genetic Heritage Study (NCD-GHS) is a consortium to help produce a comprehensive catalog of human genetic variation in Nigeria and assess the burden and etiological characteristics of non-communicable diseases in 100,000 adults.

Article. Published: 20th Apr 2022. The Human Pangenome Reference Consortium aims to offer the highest quality and most complete human pangenome reference that provides diverse genomic representation across human populations.

Article. Published: 21st Jul 2022. The Tabula Sapiens has revealed discoveries relating to shared behavior and subtle, organ-specific differences across cell types.

2.2.2 Next generation editors and delivery

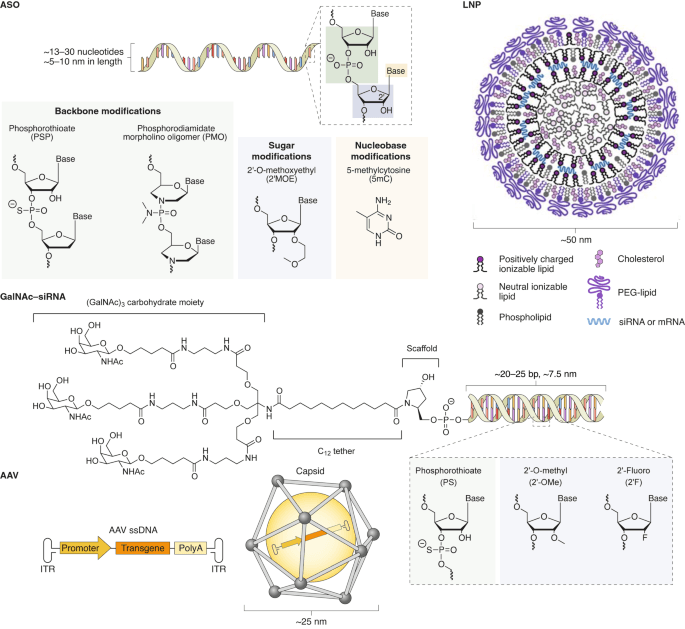

Article. Published: 31st May 2022. After years of disappointment, gene-therapy research has undergone a renaissance, with several high-profile drug approvals and a string of promising clinical-trial results against devastating genetic diseases, including sickle-cell disease and some blood cancers.

Article. Published: 24th May 2021. The first successful clinical test of a technique called optogenetics has allowed a person to see for the first time in decades, with the help of image-enhancing goggles.

Article. Published: 6th May 2022. Samira Kiani is a professor of genetic engineering at the University of Pittsburgh, and co-producer of 'Make People Better'. The advance of genetic engineering technology is inevitable, but it is not inevitably evil. To ensure that, we need a new vision for how science is practiced

Article. Published: 31st May 2021. This Review provides an overview of four platform technologies that are currently used in the clinic for delivery of nucleic acid therapeutics, describing their properties, discussing technical advancements that led to clinical approval, and highlighting examples of approved genetic drugs that make …

Article. Published: 28th Jan 2021. In the past year, there has been more CRISPR news than the awarding of the Nobel Prize in Chemistry to Jennifer Doudna and Emmanuelle Charpentier. Gene editing has continued its forward momentum in major ways, and scientists are beginning to see results.

2.2.3 Engineered organism and AI-based tools

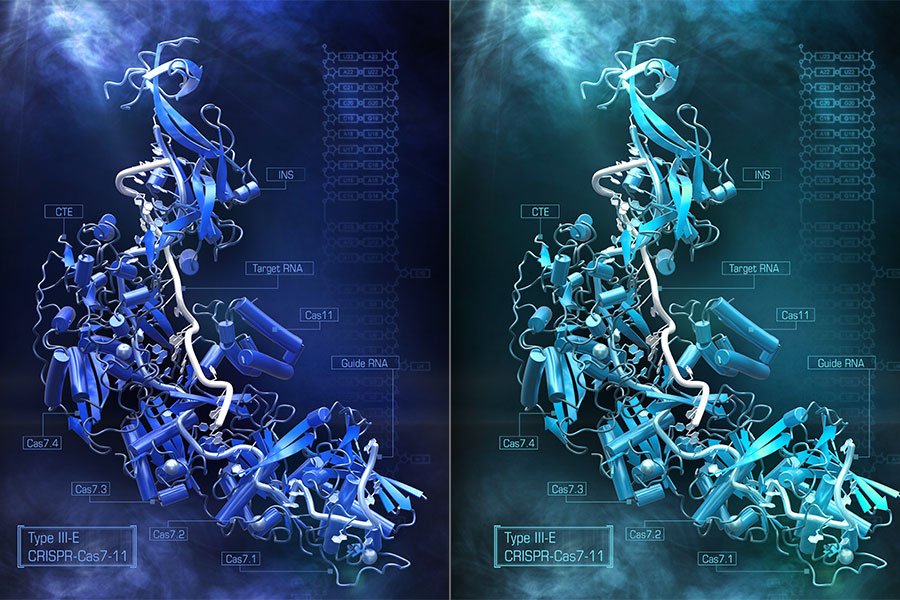

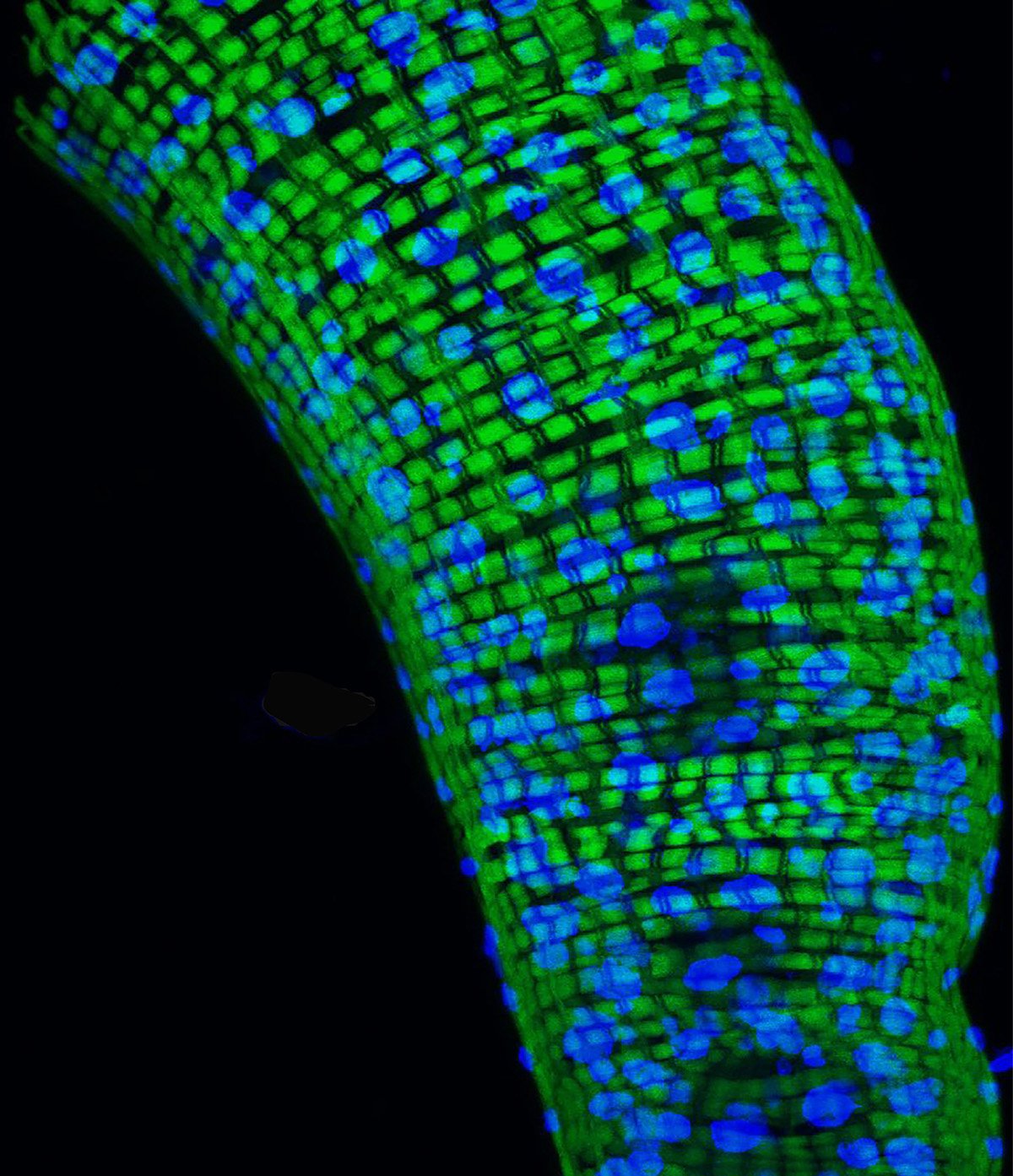

Article. Published: 22nd Jun 2021. A change of instructions in a computer program directs the computer to execute a different command. Similarly, synthetic biologists are learning the rules for how to direct the activities of human cells.

Article. Published: 31st May 2022. MIT McGovern Institute neuroscientists expand the CRISPR toolkit with a new, compact Cas7-11 enzyme, making it small enough to fit into a single viral vector for therapeutic applications.

Article. Published: 9th May 2022. A new generation of lipid nanoparticles, able to reach the heart, the lung, the brain, would enable the use of gene editing to treat common ailments such as heart disease or Alzheimer’s.

Article. Published: 11th Feb 2021. Scientists at Dyno Therapeutics, Google Research, and Harvard used machine learning models to identify AAV2 capsids for gene therapy.

2.2.4 Alternatives to direct gene editing

Article. Published: 11th Feb 2021. Organoids that contain an ancient version of a gene that influences brain development are smaller and bumpier than those with human genes.

Article. Published: 29th Apr 2021. In Greek mythology, the Chimera was a fearsome, fire-breathing monster with a lion’s head, a goat’s body, and a dragon’s tail. She terrorized the Lycians until felled by Pegasus, the winged horse. The Chimeric beast lives on in people’s imaginations, her name becoming synonymous with grotesque monst…

Article. Published: 27th Aug 2021. Engineering synthetic cells from the bottom up is expected to revolutionize biotechnology. How can synthetic cells support societal transitions necess…

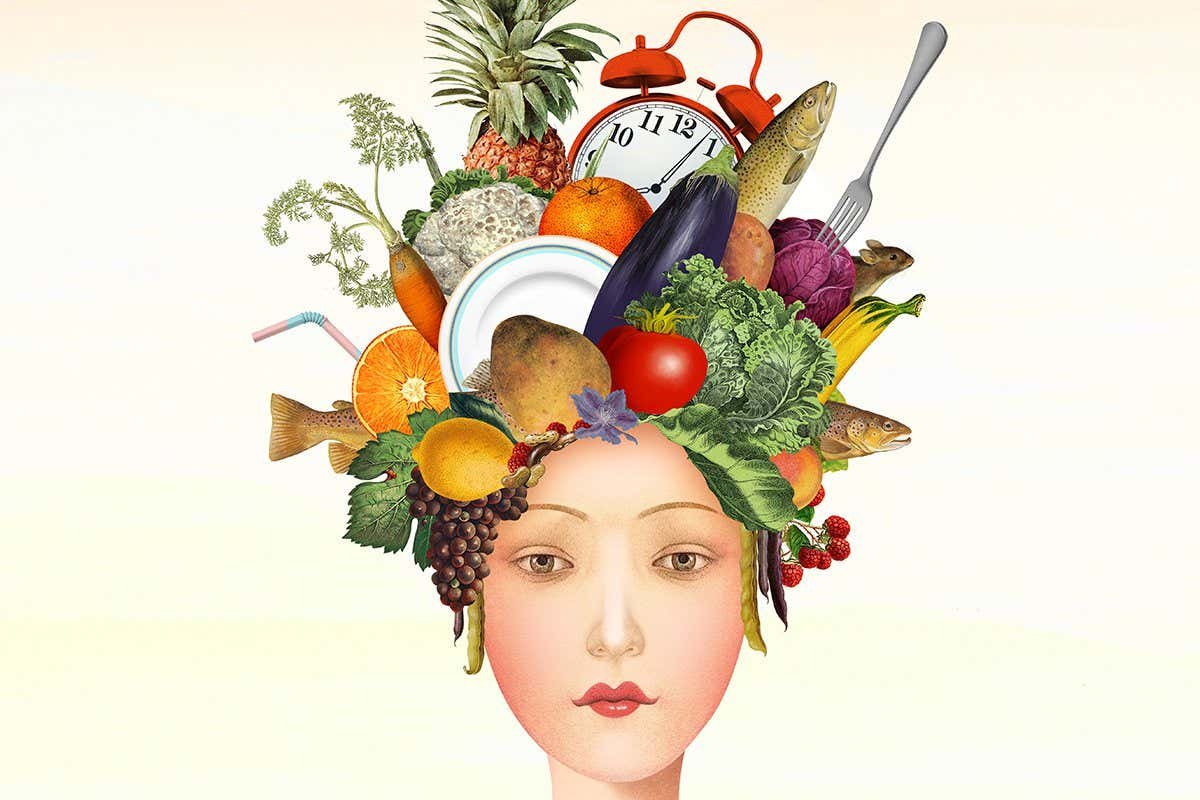

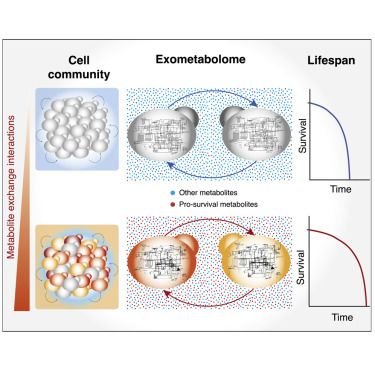

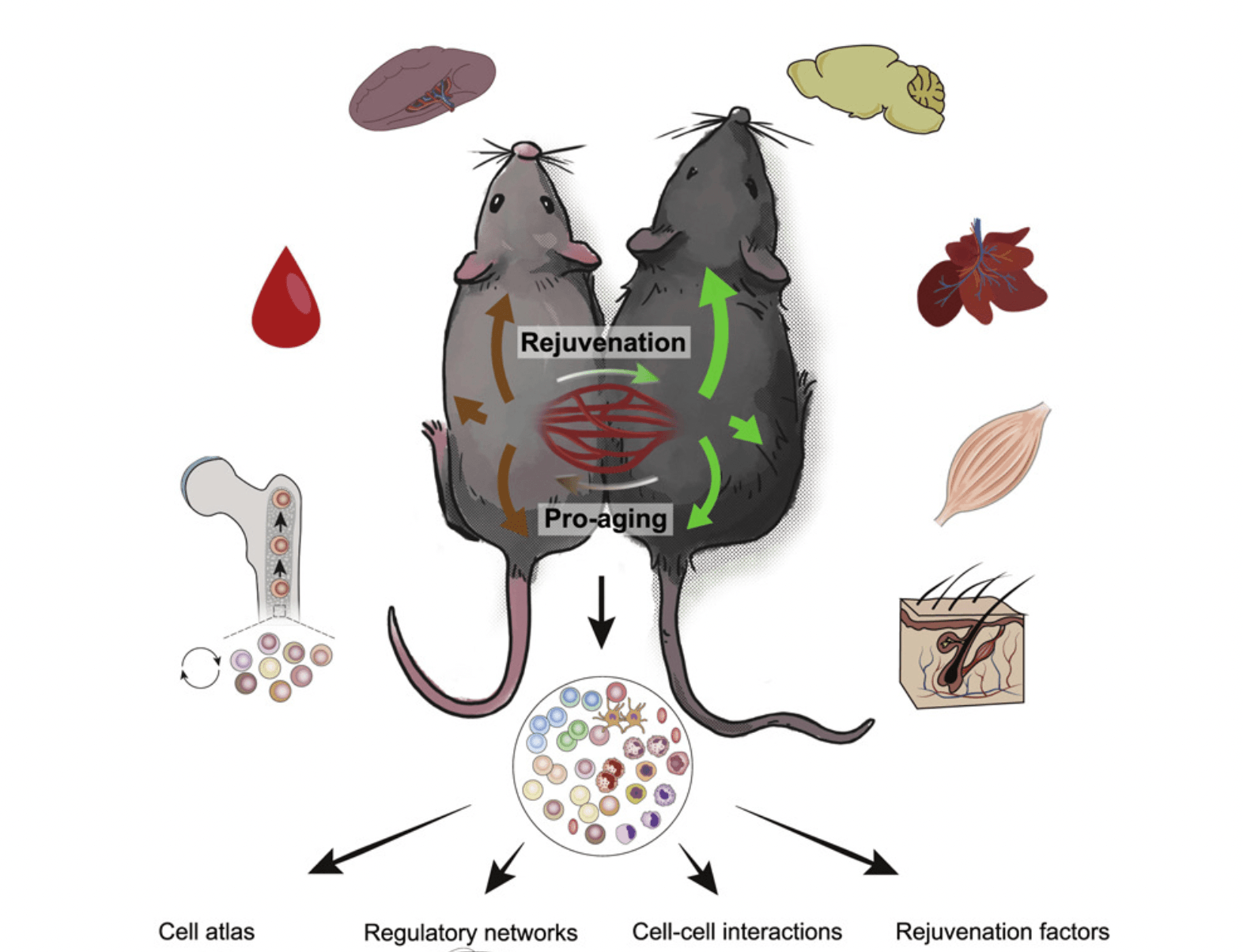

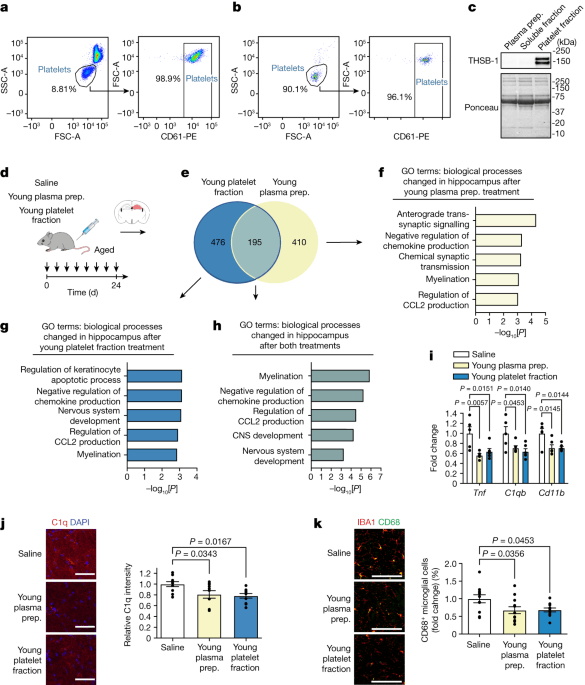

Article. Published: 20th Jul 2022. A new diet based on research into the body’s ageing process suggests you can increase your life expectancy by up to 20 years by changing what, when and how much you eat.

Article. Published: 7th Aug 2021. The German National Academy of Sciences Leopoldina provides independent advice to policymakers and society on important issues for the future.

Article. Published: 1st Jan 2023. Metabolism is deeply intertwined with aging. Effects of metabolic interventions on aging have been explained with intracellular metabolism, growth control, and signaling. Studying chronological aging in yeast, we reveal a so far overlooked metabolic property that influences aging via the exchange of…

Article. Published: 3rd Apr 2022. Because research into age-related diseases such as Alzheimer’s, Parkinson’s and cardiovascular conditions is making little headway, scientists want to combat aging itself. Who is working on which breakthrough?

Article. Published: 8th Jul 2022. New insight into how we age suggests it may be driven by a failure to switch off the forces that build our bodies. If true, it could lead to a deeper understanding of ageing – and the possibility of slowing it

Article. Published: 17th Jun 2021. Editing the epigenome, which turns our genes on and off, could be the “elixir of life.”

Article. Published: 17th Apr 2022. The young circulatory milieu capable of delaying aging in individual tissues is of interest as rejuvenation strategies, but how it achieves cellular- and systemic-level effects has remained unclear.

Article. Published: 21st Jul 2021. New research is intensifying the debate, with profound implications for the future of the planet.

Article. Published: 23rd Aug 2023. Abundant high-molecular-mass hyaluronic acid (HMM-HA) contributes to cancer resistance and possibly to the longevity of the longest-lived rodent, the naked mole-rat. To study whether the benefits of HMM-HA could be transferred to other animal species, we generated a transgenic mouse ove…

Article. Published: 12th May 2021. Data collection and analysis earn top spots among factors that have drastically increased life expectancy

Article. Published: 4th Dec 2015. Nicotinamide adenine dinucleotide (NAD+) is a coenzyme found in all living cells. It serves both as a critical coenzyme for enzymes that fuel reduction-oxidation reactions, carrying electrons from one reaction to another, and as a cosubstrate for other enzymes such as the sirtuins and poly(adenosine…

Article. Published: 16th Aug 2023. Platelet factors transfer the benefits of young blood to the ageing brain in mice through CXCR3, which mediates the cellular, molecular and cognitive benefits of systemic PF4 on the aged brain.

Article. Published: 28th Jan 2021. eLife is publishing a special issue on aging, geroscience and longevity to mark the rapid progress made in this field over the past decade, both in terms of mechanistic understanding and translational approaches that are poised to have clinical impact on age-related diseases.

Article. Published: 20th May 2022. Some older athletes may owe their high performance to their mitochondria. The cells of these individuals produce different levels of more than 800 types of proteins than those of sedentary seniors, muscle biopsies reveal.

Article. Published: 28th Apr 2022. A new approach helps to assess the impact of accelerated epigenetic aging on the risk of cancer.

Article. Published: 16th Mar 2022. Packages of RNA and proteins that bud off from cells have reversed some signs of ageing in mice, and they may account for the rejuvenating effects of young blood

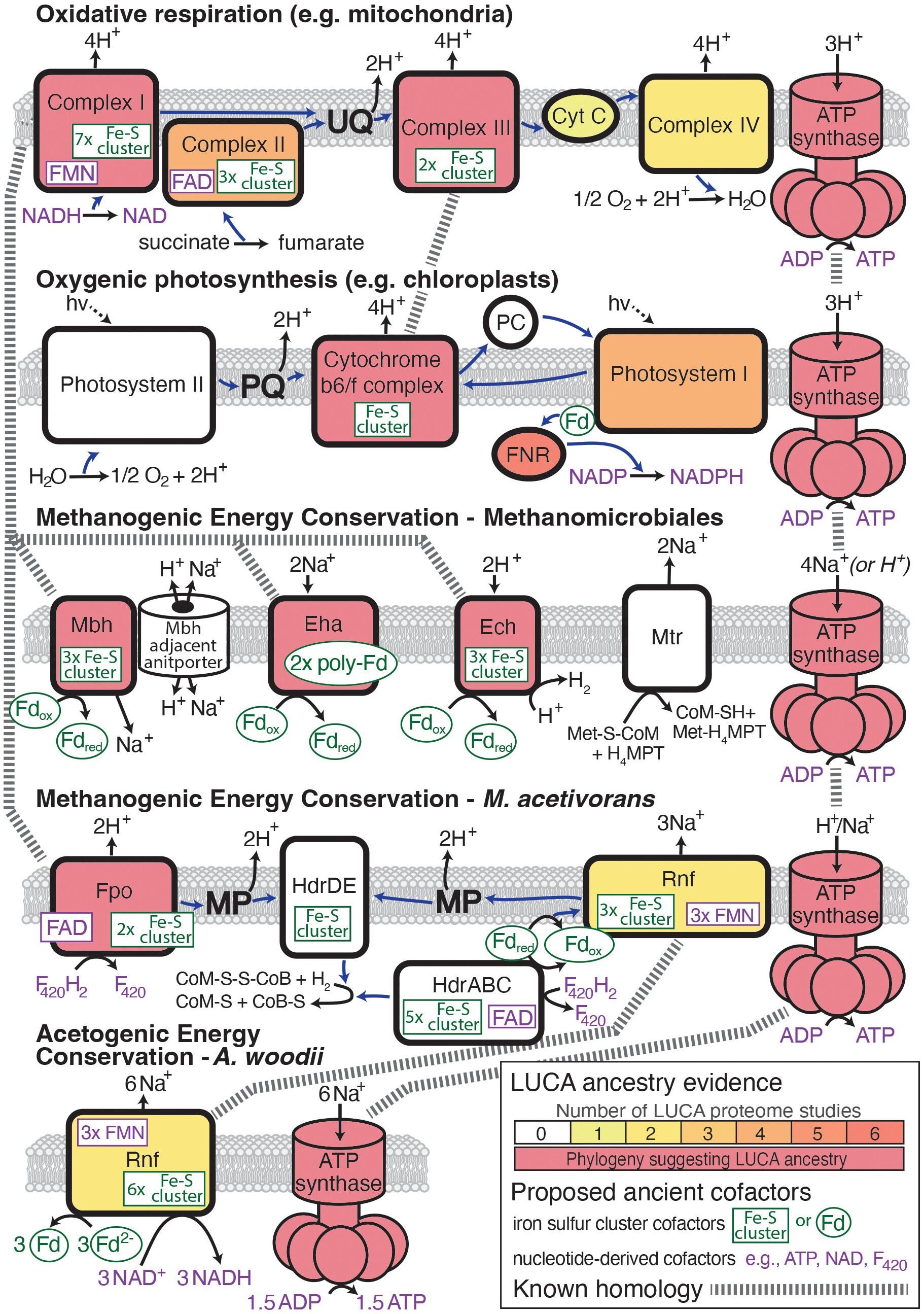

2.3.1 Fundamental Geroscience

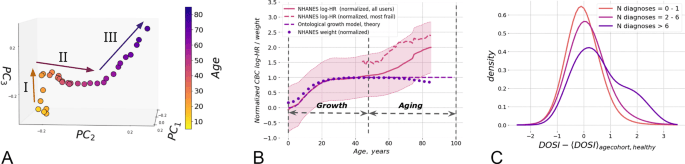

Article. Published: 2nd Nov 2020. In a study published in the journal Nature Metabolism, researchers from Bar-Ilan University in Israel report evidence that supports, for the first time, a longstanding theory on the aging process in cells. Using a novel approach from physics, they developed a computational method that quantif…

Article. Published: 25th May 2021. Aging is associated with an increased risk of chronic diseases and functional decline. Here, the authors investigate the fluctuations of physiological indices along aging trajectories and observed a characteristic decrease in the organism state recovery rate.

Article. Published: 20th May 2022. Some older athletes may owe their high performance to their mitochondria. The cells of these individuals produce different levels of more than 800 types of proteins than those of sedentary seniors, muscle biopsies reveal.

Article. Published: 24th Jun 2021. NIH scientists discover that bacteria may drive activity of many hallmark aging genes in flies.

2.3.2 Diagnostics, hallmarks and biomarkers

Article. Published: 8th Jul 2022. New insight into how we age suggests it may be driven by a failure to switch off the forces that build our bodies. If true, it could lead to a deeper understanding of ageing – and the possibility of slowing it.

Article. Published: 28th Jun 2021. A Harvard team has shown mouse embryos reset their age to zero a week into development. The study could lead to breakthroughs in longevity science.

Article. Published: 28th Apr 2022. A new approach helps to assess the impact of accelerated epigenetic aging on the risk of cancer.

Article. Published: 7th Jun 2021. The microscopic animals were frozen when woolly mammoths still roamed the planet, but were restored as though no time had passed.

2.3.3 Healthspan therapies and interventions

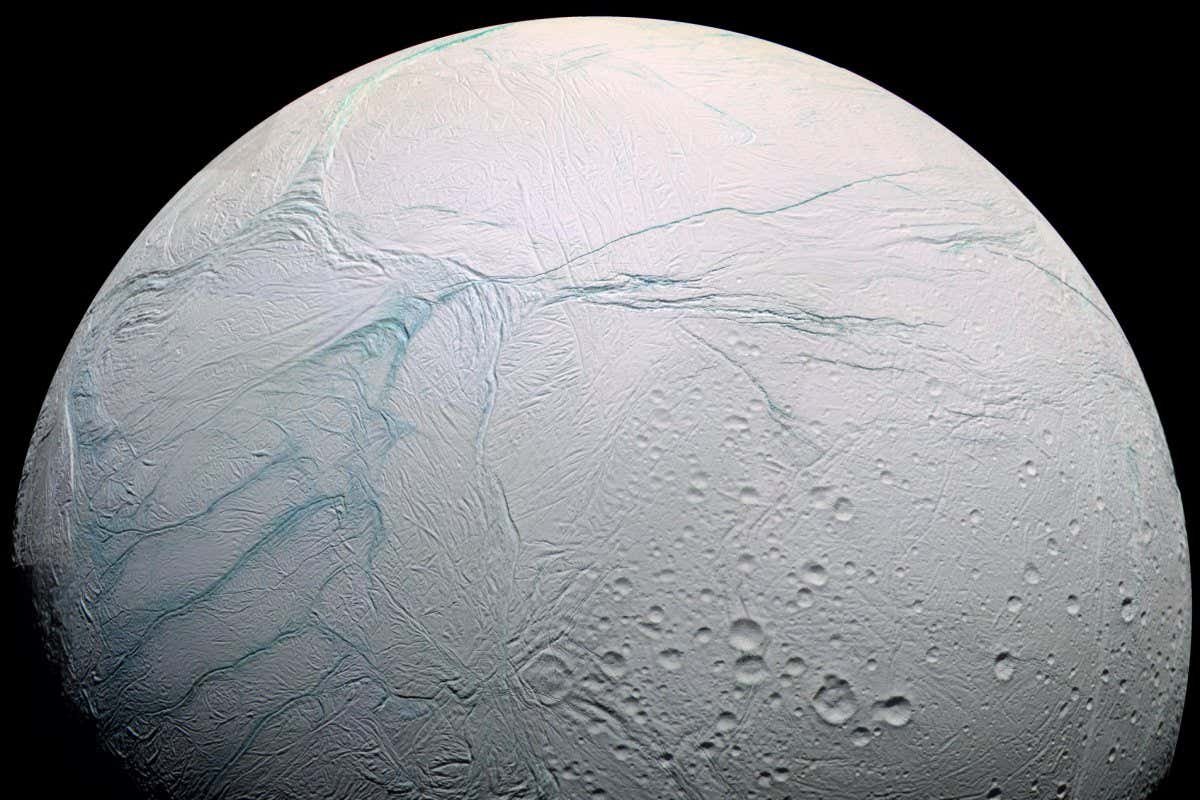

Article. Published: 20th Jul 2022. A new diet based on research into the body’s ageing process suggests you can increase your life expectancy by up to 20 years by changing what, when and how much you eat.

Article. Published: 8th Apr 2021. Biotech startups are trying to hack the process of aging and, in the process, stave off the most devastating diseases.

Article. Published: 16th Mar 2021. A key sign of ageing slows right down when ground squirrels are hibernating. This suggests we might be able to induce similar changes to put humans in suspended animation for long-distance space travel.

Article. Published: 16th Mar 2022. Packages of RNA and proteins that bud off from cells have reversed some signs of ageing in mice, and they may account for the rejuvenating effects of young blood.

2.3.4 Lifespan extension and rejuvenation

Article. Published: 18th Jun 2021. Research suggests humans cannot slow the rate at which they get older because of biological constraints.

Article. Published: 5th Sep 2019. In a small trial, a cocktail of drugs seemed to rejuvenate the body’s ‘epigenetic clock’.

Article. Published: 3rd Apr 2022. Because research on age-related diseases such as Alzheimer’s, Parkinson’s, or cardiovascular disease is making little headway, scientists want to fight aging itself. Who is working on which breakthrough?

Article. Published: 17th Apr 2022. The young circulatory milieu capable of delaying aging in individual tissues is of interest as rejuvenation strategies, but how it achieves cellular- and systemic-level effects has remained unclear.

Article. Published: 2nd Dec 2020. ‘Reprogramming’ approach seems to make old cells young again.

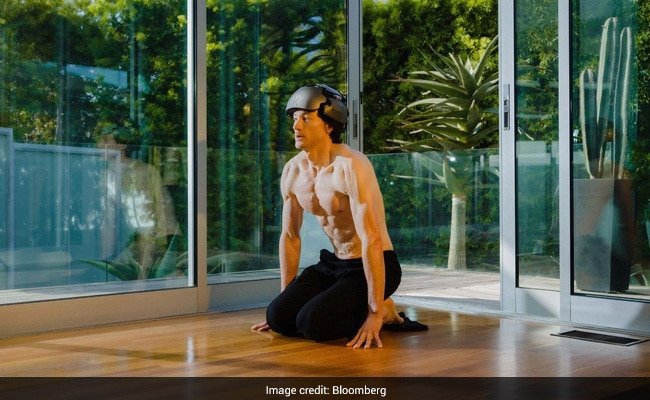

Article. Published: 17th Jun 2021. Over the next few weeks, a company called Kernel will begin sending dozens of customers across the U.S. a $50,000 helmet that can, crudely speaking, read their mind.

Article. Published: 26th Jun 2023. A brain scientist and a philosopher have resolved a wager on consciousness that was made when Bill Clinton was president.

Article. Published: 24th May 2022. Although there is widespread agreement that consciousness exists, little else is consensual about the phenomenon.

Article. Published: 20th May 2022. This paper examines consciousness from the perspective of theoretical computer science (TCS), a branch of mathematics concerned with understanding the underlying principles of computation and complexity, including the implications and surprising consequences of resource limitations.

Article. Published: 6th Apr 2022. MRI data from more than 100 studies have been aggregated to yield new insights about brain development and ageing, and create an interactive open resource for comparison of brain structures throughout the human lifespan, including those associated with neurological and psychiatric disorders.

Article. Published: 24th Jun 2020. Electrical signals coming from your heart and other organs influence how you perceive the world, the decisions you take, your sense of who you are and consciousness itself.

Article. Published: 18th Mar 2022. We tested the hypothesis that deep brain stimulation (DBS) of central thalamus might restore both arousal and awareness following consciousness loss.

Article. Published: 9th Dec 2020. This commentary was first published by S. Rajaratnam School of International Studies, Nanyang Technological University, Singapore.

Article. Published: 26th Jul 2016. Human enhancement is at least as old as human civilization. People have been trying to enhance their physical and mental capabilities for thousands of years, sometimes successfully – and sometimes with inconclusive, comic and even tragic results.

Article. Published: 14th Mar 2021. Experience is in unexpected places, including in all animals, large and small, and perhaps even in brute matter itself.

Article. Published: 3rd Jun 2021. Consciousness is sometimes referred to as ‘the ghost’ in the machinery of our brain. Is it time we gave up the ghost to focus on the machine?

Article. Published: 1st Sep 2021. The neurorobotic fusion of touch, grip kinesthesia, and intuitive motor control promotes levels of behavioral performance that are stratified toward able-bodied function and away from standard-of-care prosthetic users.

Article. Published: 14th Jun 2023. Psychedelics are a broad class of drugs defined by their ability to induce an altered state of consciousness. These drugs have been used for millennia in both spiritual and medicinal contexts, and a number of recent clinical successes have spurred a renewed interest in developing psych…

Article. Published: 10th May 2022. In an industry-first, the company Synchron and Mount Sinai Hospital in New York have enrolled their first human patient in a U.S. clinical trial called COMMAND to evaluate an endovascular brain-computer interface (BCI) for patients with severe paralysis.

Article. Published: 4th May 2022. Elon Musk’s Neuralink is overtaken by a rival in a race to regulatory review when Synchron Inc. enrolled the first patient in its U.S. clinical trial, putting the company’s implant on a path toward possible regulatory approval for wider use in people with paralysis.

Article. Published: 31st Mar 2022. Ecological opportunities in the early Cenozoic favored larger, not smarter, mammals. How and why did mammals evolve large brain sizes relative to their body mass?

Article. Published: 3rd May 2022. Various theories have been developed for the biological and physical basis of consciousness. Four prominent theoretical approaches to consciousness: higher-order theories, global workspace theories, re-entry and predictive processing theories and integrated information theory.

Article. Published: 11th May 2015. What happens in the brains of people who go from being peaceable neighbours to slaughtering each other on a mass scale?

Article. Published: 2nd Jun 2021. Six different teams from across the globe are uniting in a challenge to test our fundamental theories of consciousness.

Article. Published: 13th Sep 2022. The human brain contains about 100 billion neurons (as many stars as could be counted in the Milky Way) – one million times those contained in our cubic millimetre of mouse brain.

2.4.1 Consciousness assessment

Article. Published: 6th Apr 2022. MRI data from more than 100 studies have been aggregated to yield new insights about brain development and ageing, and create an interactive open resource for comparison of brain structures throughout the human lifespan, including those associated with neurological and psychiatric disorders.

Article. Published: 9th Dec 2020. This commentary was first published by S. Rajaratnam School of International Studies, Nanyang Technological University, Singapore.

Article. Published: 31st Mar 2022. Ecological opportunities in the early Cenozoic favored larger, not smarter, mammals. How and why did mammals evolve large brain sizes relative to their body mass?

2.4.2 Cognitive capacity enhancement

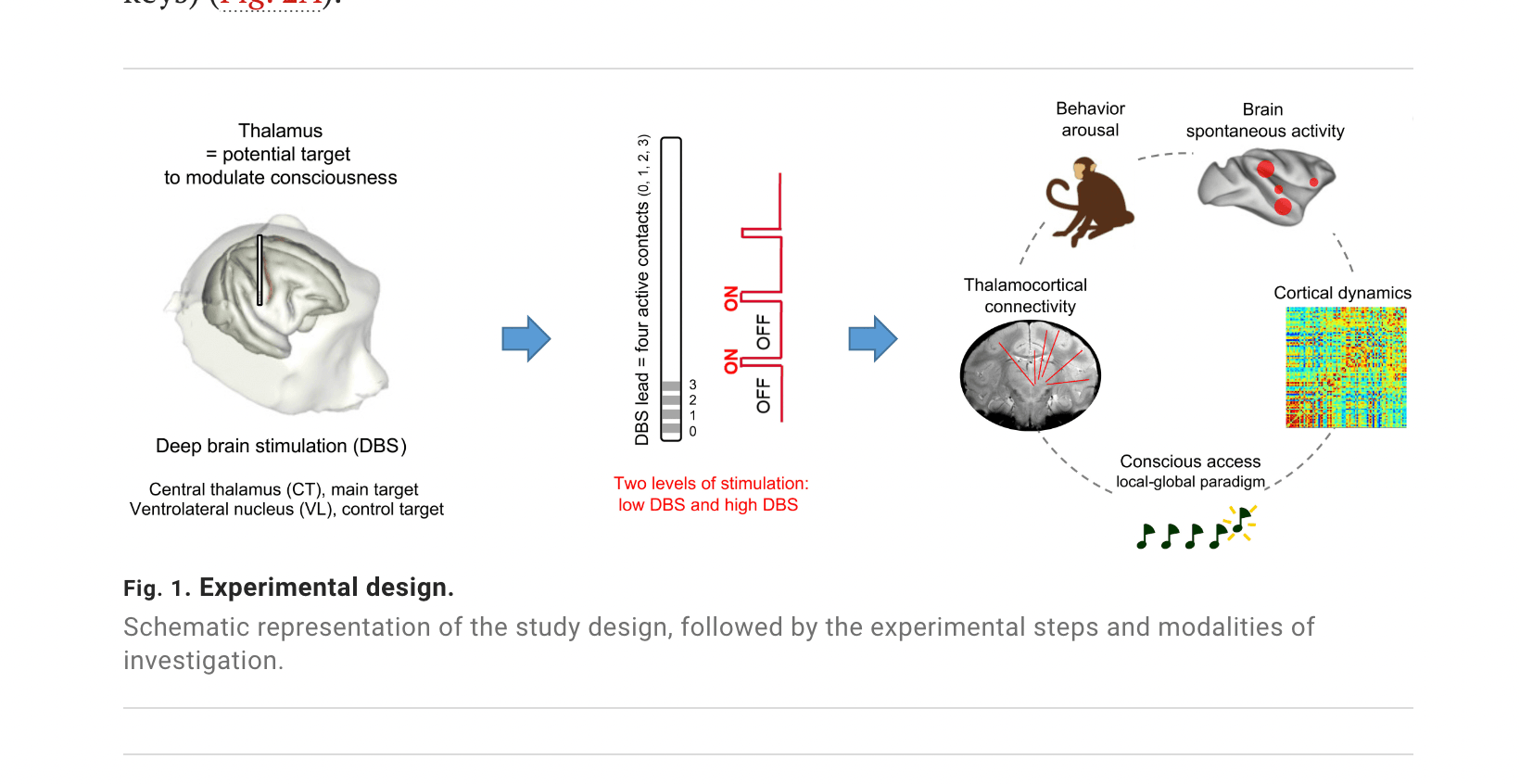

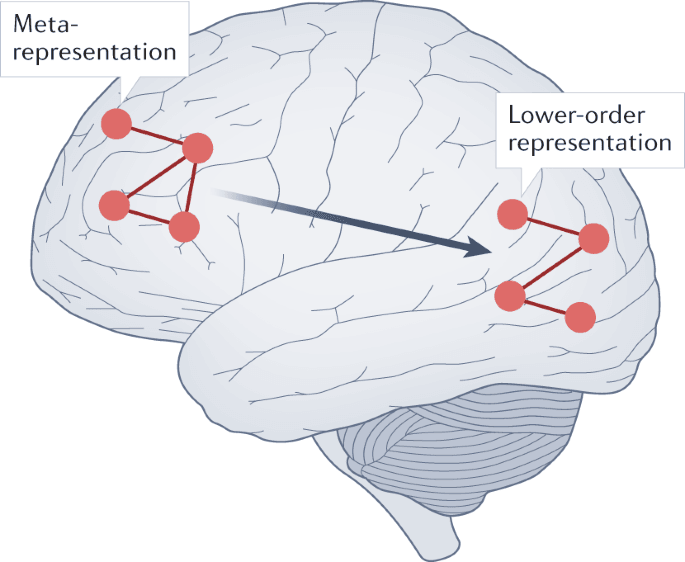

Article. Published: 24th May 2022. Although there is widespread agreement that consciousness exists, little else is consensual about the phenomenon.

Article. Published: 29th Mar 2021. Neuroscience is bad at explaining what it’s like to be alive. Mark Solms thinks he can change that, with help from Freud, of all people.

Article. Published: 20th May 2022. This paper examines consciousness from the perspective of theoretical computer science (TCS), a branch of mathematics concerned with understanding the underlying principles of computation and complexity, including the implications and surprising consequences of resource limitations.

Article. Published: 18th Mar 2022. We tested the hypothesis that deep brain stimulation (DBS) of central thalamus might restore both arousal and awareness following consciousness loss.

Article. Published: 5th Apr 2021. Instead of a code encrypted in the wiring of our neurons, could consciousness reside in the brain’s electromagnetic field?

Article. Published: 3rd May 2022. Nature Reviews Neuroscience - Various theories have been developed for the biological and physical basis of consciousness. In this Review, Anil Seth and Tim Bayne discuss four prominent theoretical...

Article. Published: 3rd May 2022. Various theories have been developed for the biological and physical basis of consciousness. Four prominent theoretical approaches to consciousness: higher-order theories, global workspace theories, re-entry and predictive processing theories and integrated information theory.

Article. Published: 2nd Jun 2021. Six different teams from across the globe are uniting in a challenge to test our fundamental theories of consciousness.

2.4.3 Consciousness-augmenting interventions

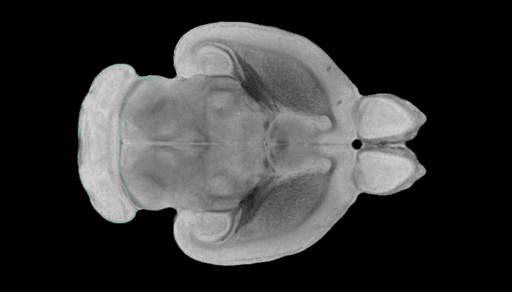

Article. Published: 17th Jun 2021. Over the next few weeks, a company called Kernel will begin sending dozens of customers across the U.S. a $50,000 helmet that can, crudely speaking, read their mind.

Article. Published: 24th Jan 2019. The field of brain–computer interfaces (BCIs) has grown rapidly in the last few decades, allowing the development of ever faster and more reliable assistive technologies for converting brain activity into control signals for external devices for people with severe disabilities [...]

Article. Published: 4th Feb 2021. A neuroinformatics expert and a quantum biophysicist are our guests on the podcast this week.

Article. Published: 10th May 2022. In an industry-first, the company Synchron and Mount Sinai Hospital in New York have enrolled their first human patient in a U.S. clinical trial called COMMAND to evaluate an endovascular brain-computer interface (BCI) for patients with severe paralysis.

Article. Published: 4th May 2022. Elon Musk’s Neuralink is overtaken by a rival in a race to regulatory review when Synchron Inc. enrolled the first patient in its U.S. clinical trial, putting the company’s implant on a path toward possible regulatory approval for wider use in people with paralysis.

Article. Published: 11th May 2015. What happens in the brains of people who go from being peaceable neighbours to slaughtering each other on a mass scale?

Article. Published: 13th Sep 2022. The human brain contains about 100 billion neurons (as many stars as could be counted in the Milky Way) – one million times those contained in our cubic millimetre of mouse brain.

2.4.4 Beyond-human consciousness

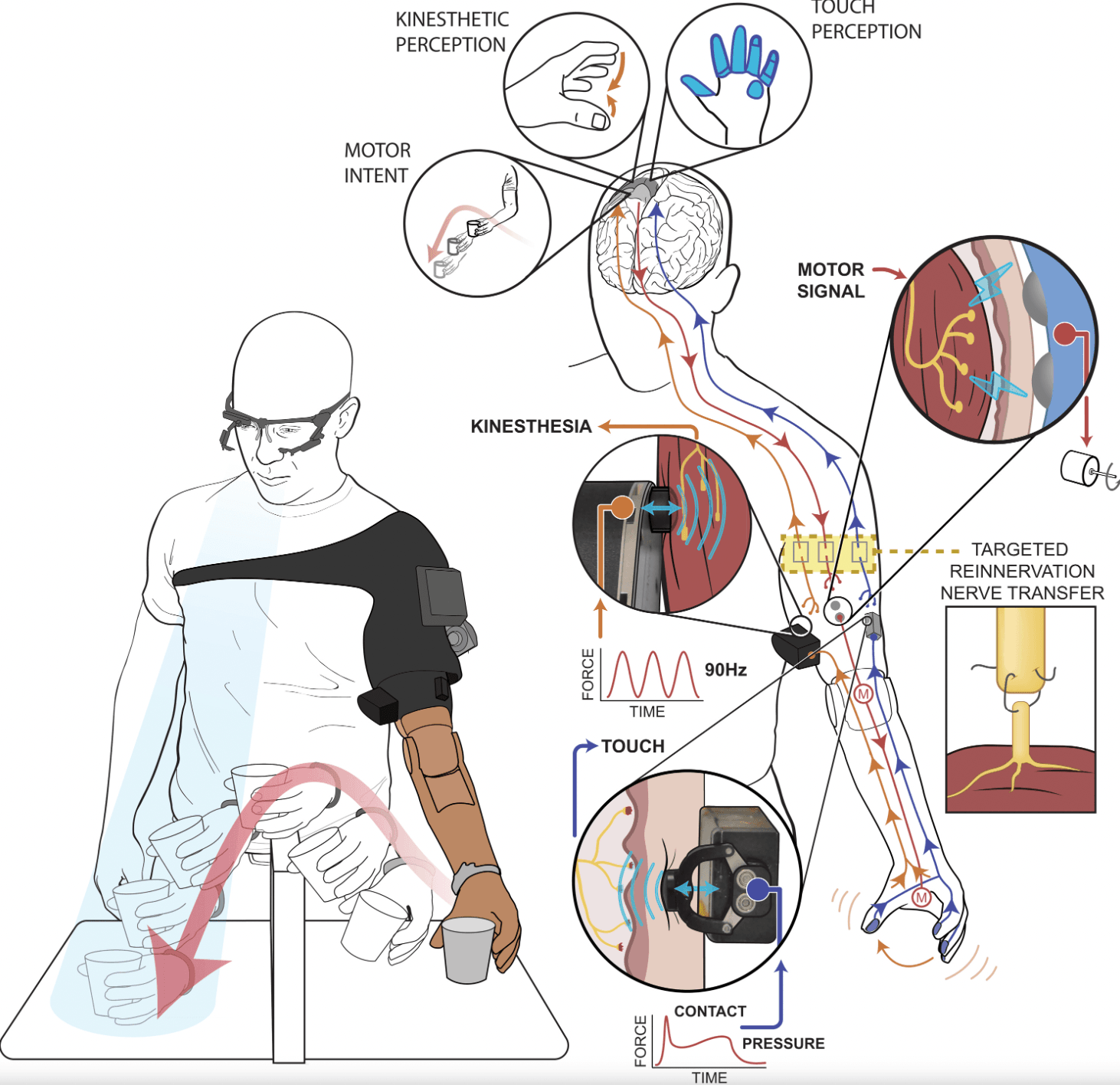

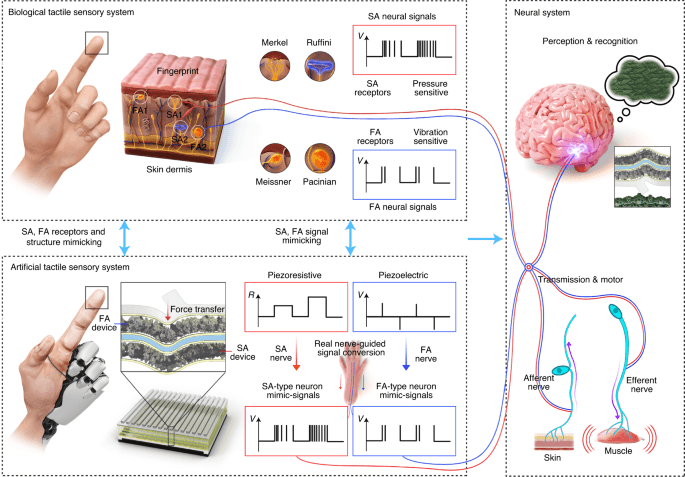

Article. Published: 3rd Jun 2021. A tactile sensing system that can learn to identify different types of surface can be created using sensors that mimic the fast and slow responses of mechanoreceptors found in human skin.

Article. Published: 10th Feb 2021. EPFL researchers have identified specific neurons that help activate sensory processing in nearby nerve cells, a finding that could explain how the brain integrates signals necessary for tactile perception and learning.

Article. Published: 1st Sep 2021. The neurorobotic fusion of touch, grip kinesthesia, and intuitive motor control promotes levels of behavioral performance that are stratified toward able-bodied function and away from standard-of-care prosthetic users.

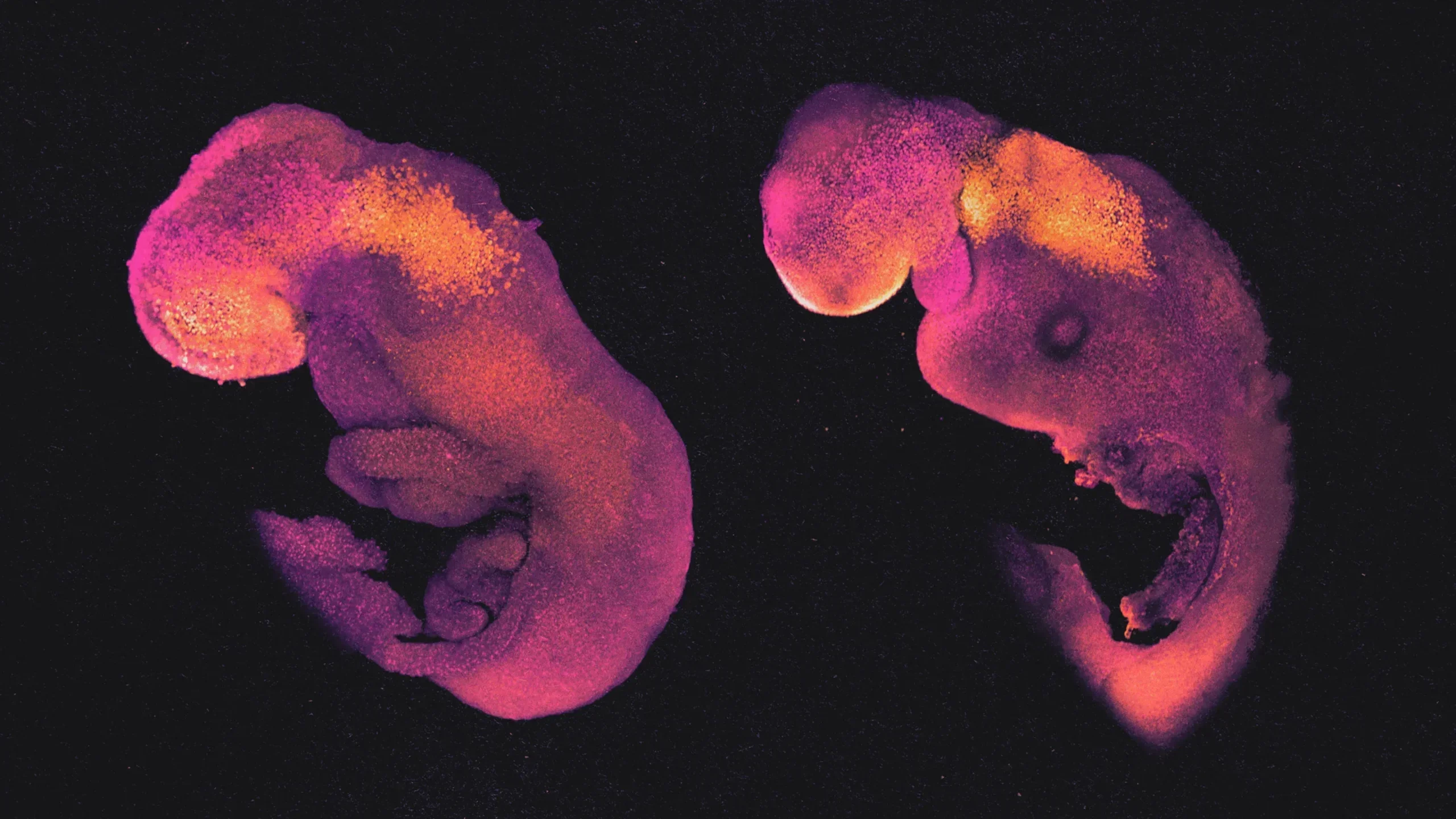

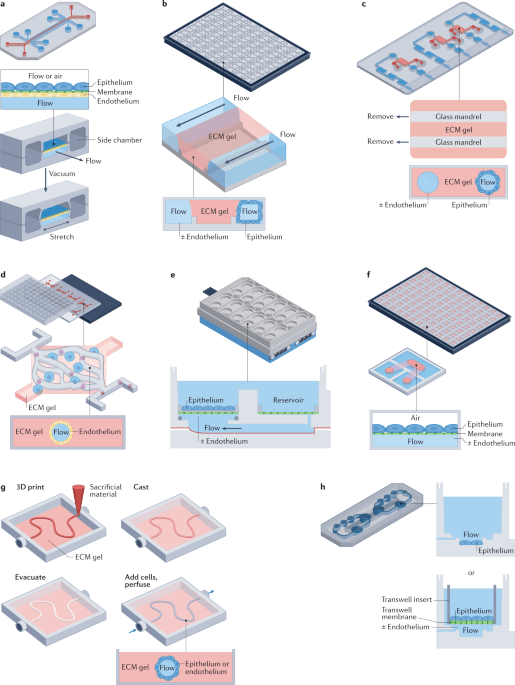

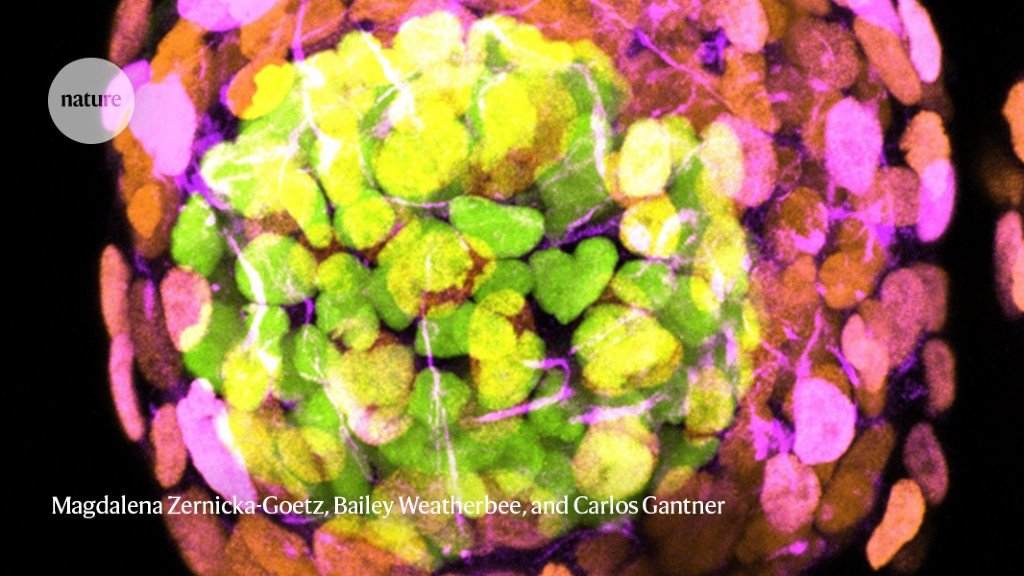

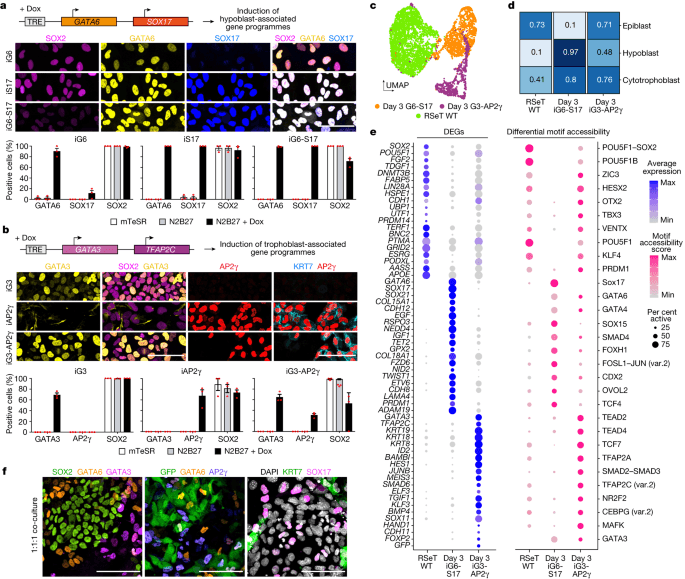

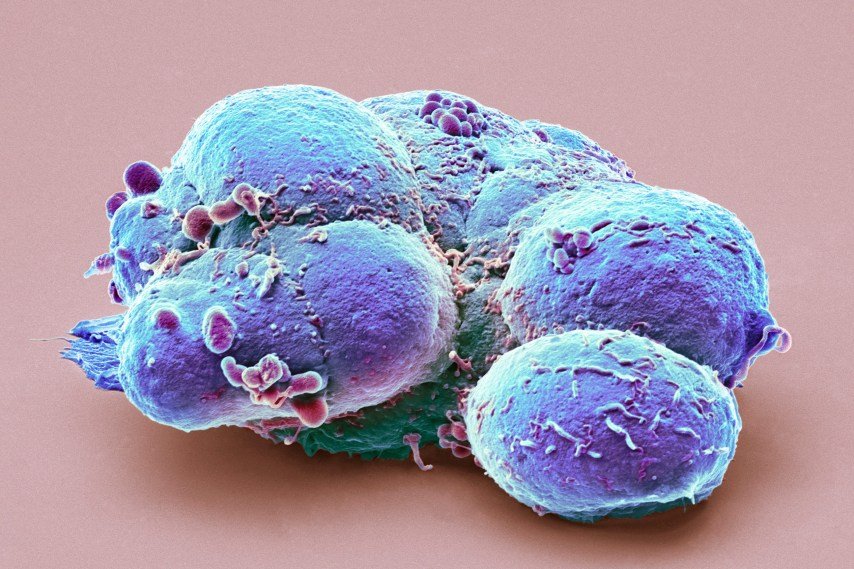

Article. Published: 21st Feb 2022. Harvard researchers used lab-grown clumps of neurons called organoids to reveal how three genes linked to autism affect the timing of brain development.

Article. Published: 26th Sep 2023.

Article. Published: 23rd Sep 2022. Miniature organoids are being implanted into animals. Humans are next.

Article. Published: 25th Mar 2022. This Review discusses the types of single and multiple human organ-on-a-chip (organ chip) microfluidic devices and their diverse applications for disease modelling, drug development and personalized medicine, as well as the challenges that must be overcome for organ chips to reach their full potenti…

Article. Published: 16th Jun 2023. A pair of studies raises ethical and legal questions about the status of lab-grown human embryo models.

Article. Published: 1st Apr 2022. In order to move xenotransplants from preclinical work in monkeys to clinical trials in people, the transplant community will need to adjust its definition of success, experts said at #STATBreakthrough.

Article. Published: 19th May 2022. In September 2021, the University of Alabama at Birmingham announced the first successful clinical-grade transplant of gene-edited pig kidneys into a human (albeit brain-dead), replacing the recipient’s native kidneys in a display of function.

Article. Published: 27th Jun 2023. Co-culture of wild-type human embryonic stem cells with two types of extraembryonic-like cell engineered to overexpress specific transcription factors results in an embryoid model that recapitulates multiple features of the post-implantation human embryo.

Article. Published: 17th Mar 2022. Pyramidal cells receiving more connections from nearby cells exhibit stronger and more reliable visual responses. Sample code shows data access and analysis.

Article. Published: 4th Apr 2022. The secrets behind the evolution of the human mind might lie within the thousands of tiny “brains” called organoids. Researchers around the world are growing brainlike organs to unravel how people evolved to think.

2.5.1 Foundational research