Future Horizons:

10-year horizon

AI works with more modalities

25-year horizon

AI understands the world through multiple data streams

Multimodal AI is already used in autonomous vehicles to fuse input from cameras, radar and LIDAR, and shows promise in healthcare, where a wide range of physiological signals need to be considered.[19,20] More recently, large language models repurposed to work with multiple data modalities have shown considerable promise. Photorealistic imagery and video clips can now be generated from simple text descriptions, the latest AI-powered chatbots can perform complex image analysis and robots can combine visual input, motion data and natural-language commands to carry out complex tasks.[21,22,23]

The ability to draw correlations between different data sources can accelerate learning and help ground the knowledge encoded by language models in the realities of the physical world.[24,25] It could also transform human-computer interaction by allowing people to communicate with machines through a wide range of media. These approaches are data-intensive, however, and while the internet is a goldmine of text and images, finding sufficient training material for other modalities could be a challenge. Robotics offers a potential model for overcoming this barrier, with research groups pooling resources to create massive open datasets of multimodal training data.[26,27]

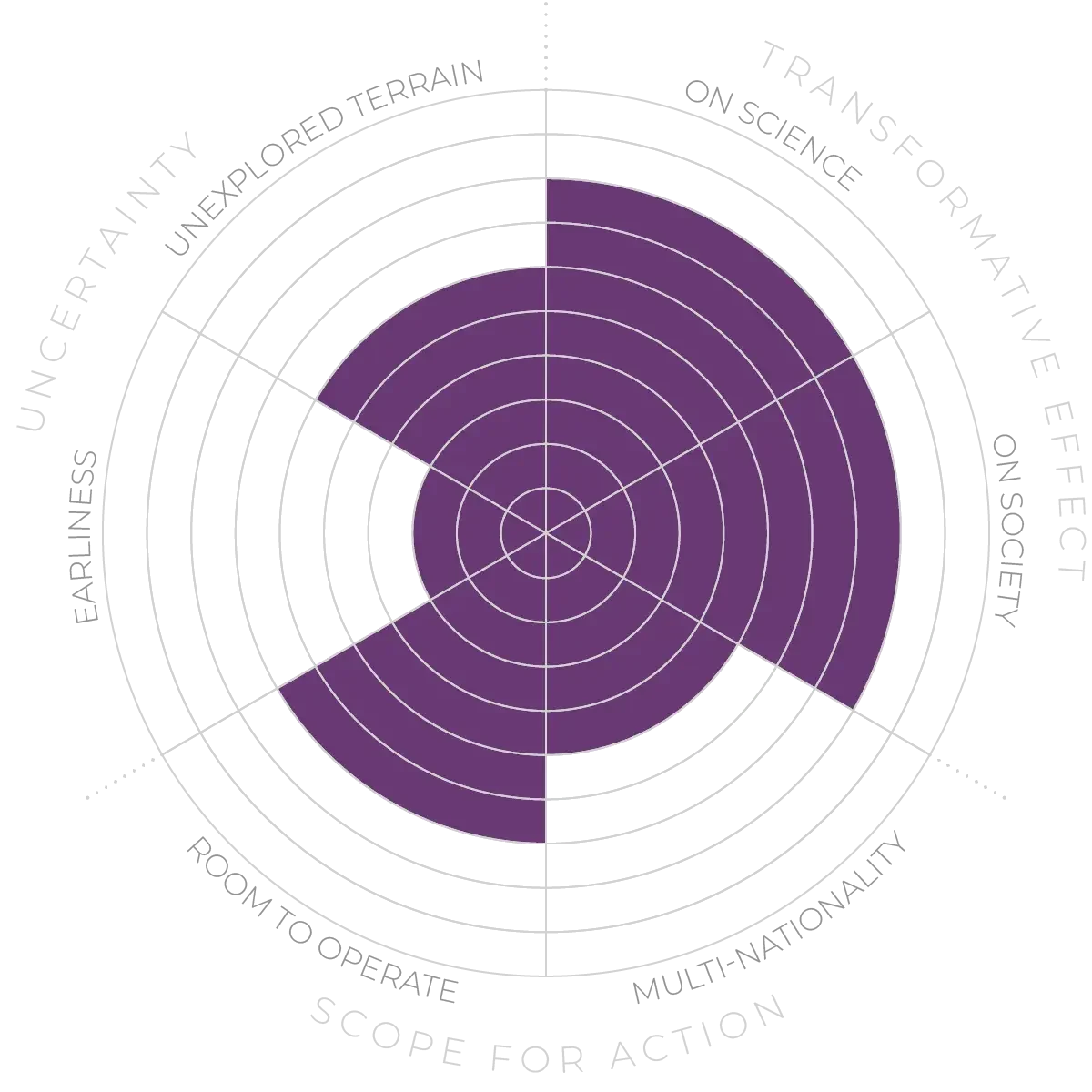

Multimodal AI - Anticipation Scores

The Anticipation Potential of a research field is determined by the capacity for impactful action in the present, considering possible future transformative breakthroughs in a field over a 25-year outlook. A field with a high Anticipation Potential, therefore, combines the potential range of future transformative possibilities engendered by a research area with a wide field of opportunities for action in the present. We asked researchers in the field to anticipate:

- The uncertainty related to future science breakthroughs in the field

- The transformative effect anticipated breakthroughs may have on research and society

- The scope for action in the present in relation to anticipated breakthroughs

This chart summarises their responses to each of these elements, which, when combined, provide the Anticipation Potential for the topic. See methodology for more information.