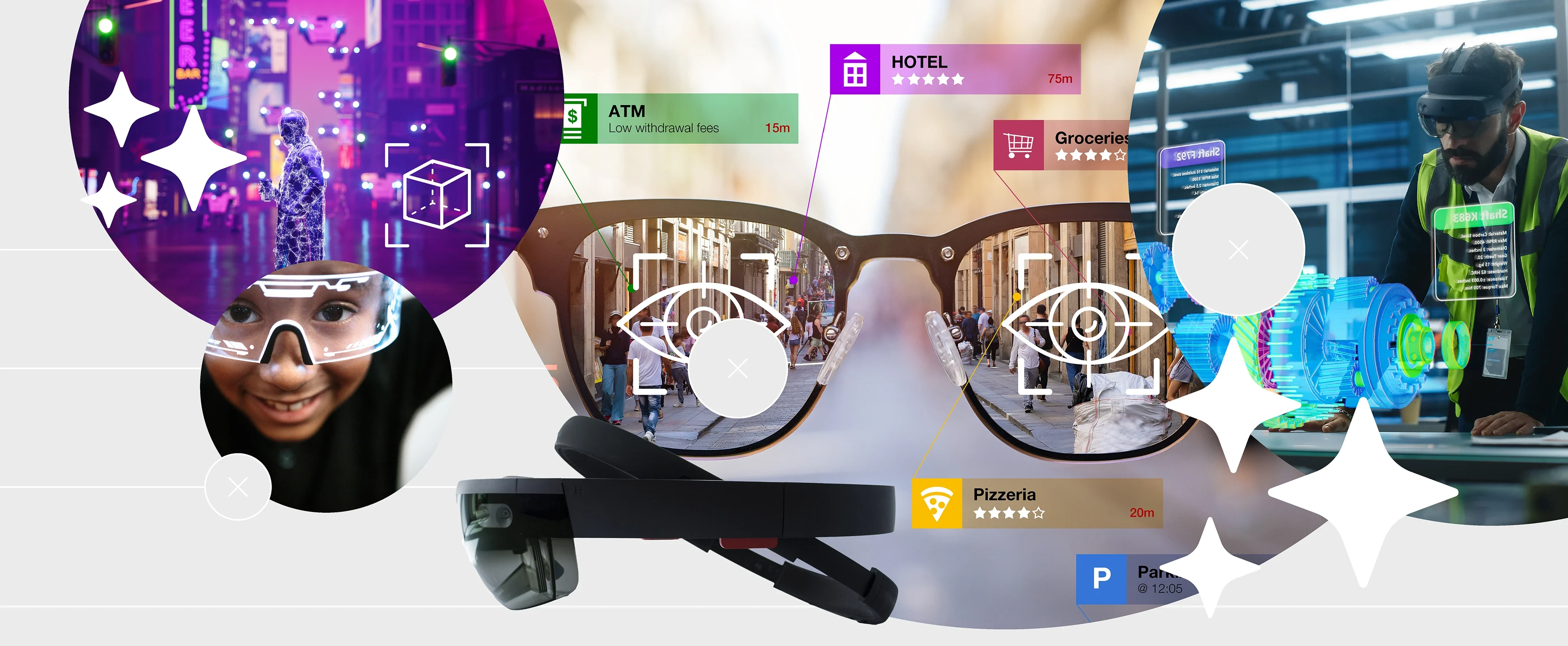

Algorithmic advances now make it possible to use inexpensive cameras and motion sensors to simultaneously track a device’s location and map its surroundings.18 Advanced computer vision can also convert 2D images captured from moving cameras into detailed 3D models of spaces and objects19,20 and use them to reconstruct photorealistic 3D scenes from any point of view,21,22 enabling the virtualisation of entire environments. AI can also layer these 3D models with semantic labels that categorise objects and features within the environment,23 and seamlessly integrate virtual elements into scenes by allowing them to collide with or be occluded by real-world objects.24 Many of these capabilities are now integrated into freely available software development kits from large technology and gaming companies.25,26

However, developing a truly immersive XR experience will require AI models that can go beyond simply mapping and reconstructing 3D environments to understanding and reasoning about them. Fortunately, solving these problems will be crucial for a wide range of AI applications, including self-driving cars, robots and drones, so considerable resources are already going into this problem. Vision-language models trained on large amounts of image and text data appear capable of some degree of spatial reasoning.27,28 But “world models”, which learn rich representations of an environment’s spatial and physical properties to make predictions of how it will evolve over time, could be even more promising.29,30