Future Horizons:

10-year horizon

XR becomes globally available

25-year horizon

Users’ thoughts directly interface with XR

Many XR headsets today come with handheld controllers, but interaction is becoming increasingly multimodal. On many devices, camera-based gesture recognition and eye-tracking allow users to interact with virtual elements through simple hand movements or by using their visual focus as a cursor. Beyond providing more intuitive control options, the wide range of sensors embedded in leading XR devices can provide powerful insights into the user’s attention, intent and actions.

Machine-learning models can analyse eye-tracking data and egocentric video captured by XR devices to infer what activity a user is engaged in33,34 or even their intention to interact with both virtual and physical objects.35 Eye-tracking data can also provide a window into the user’s attention and level of engagement.36 When combined with basic physiological monitoring, it can help to track the user’s cognitive load while they carry out tasks in XR.37

By combining these user-centric insights with spatial information, XR systems can adapt virtual elements and control interfaces to the user’s immediate context in real time.38 This can both improve the usability of virtual interfaces39 and overlay helpful information and control options onto real-world objects.40,41 It can even reduce the need for real-world physical interfaces, given the advances in “internet of things” connectivity between XR devices and the physical world. It also allows XR systems to detect when the user’s ability to interact with specific controls and interfaces is impaired by factors such as poor lighting, noise or multi-tasking, and to adapt accordingly.42

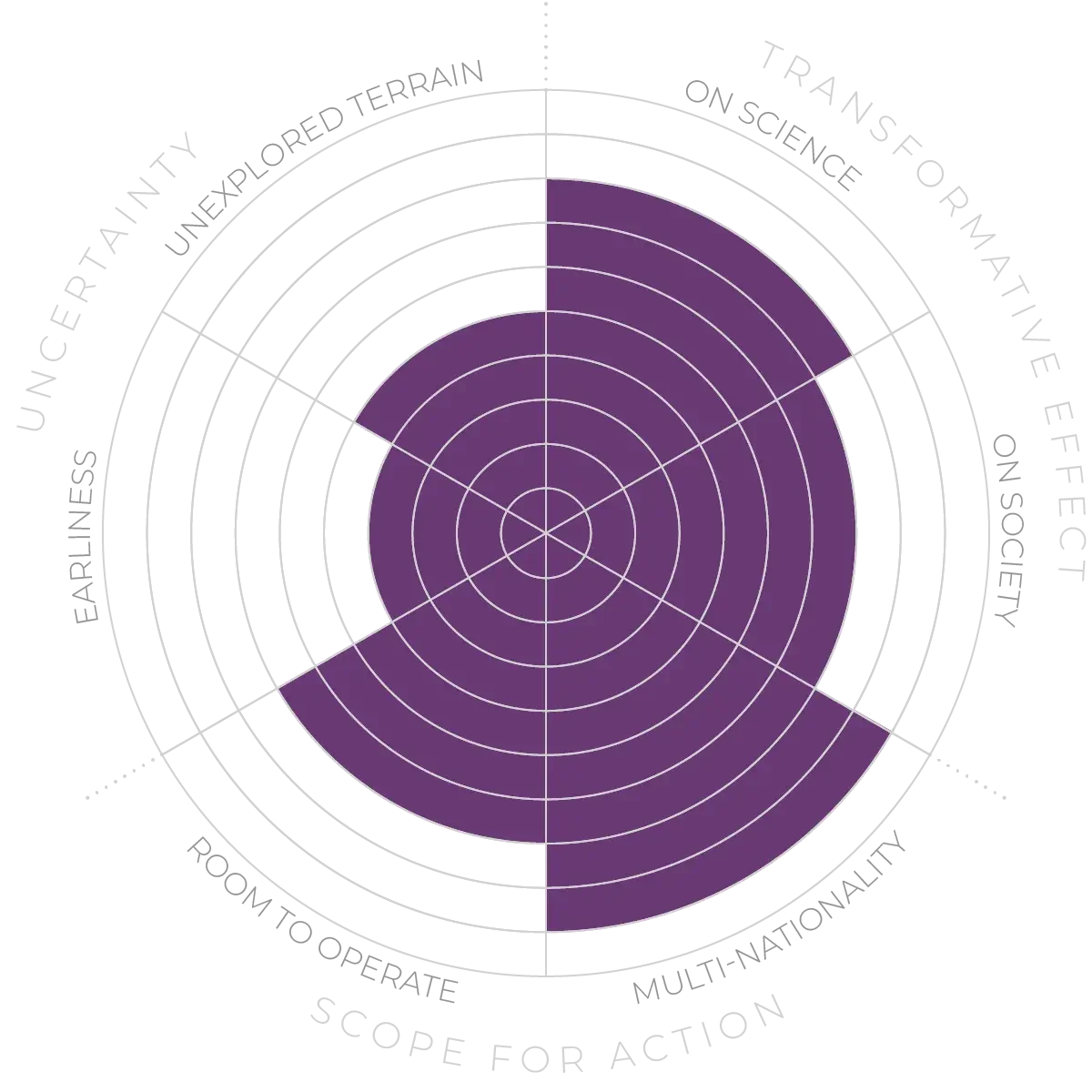

XR interfaces - Anticipation Scores

The Anticipation Potential of a research field is determined by the capacity for impactful action in the present, considering possible future transformative breakthroughs in a field over a 25-year outlook. A field with a high Anticipation Potential, therefore, combines the potential range of future transformative possibilities engendered by a research area with a wide field of opportunities for action in the present. We asked researchers in the field to anticipate:

- The uncertainty related to future science breakthroughs in the field

- The transformative effect anticipated breakthroughs may have on research and society

- The scope for action in the present in relation to anticipated breakthroughs

This chart summarises their responses to each of these elements, which, when combined, provide the Anticipation Potential for the topic. See methodology for more information.