Future Horizons:

10-year horizon

Robots start to read human intention

25-year horizon

Humans and robots adapt to each other

Humans and robots are able to interact seamlessly and work side by side in most environments. Robots have developed sophisticated social intelligence and self-awareness, able to understand and respond to human emotions, while humans have adapted to the ways in which robotic “cognition” is different from the way humans think.

At the most basic level, there has been progress on “human-aware” navigation algorithms that allow robots to safely occupy the same space as people.56 Collaborative robots, or “cobots”, are also capable of simple interactions like object handovers.57 But more sophisticated forms of human interaction are facilitated by deep reservoirs of implicit knowledge about social etiquette, and efforts to imbue this understanding and corresponding behaviour in machines remain rudimentary.58

LLMs present a promising opportunity to chip away at these challenges by allowing humans to interface with robots through natural language.59 There is even tentative evidence that they have limited ability to model the mental states of humans.60 The outputs of these models can be inconsistent and unreliable, though, which makes them unsafe to deploy in many real-world situations.

Understanding how humans interact with robots, both physically and psychologically, and what their expectations of the technology are, is also crucial.61,62,63 So is a better understanding of how integrating robots into groups of humans affects social dynamics.64 There is already evidence that the use of robots in social care can have unintended consequences that harm those they are supposed to help.65 And given the prevalence of bias in AI training data, robots could replicate existing patterns of discrimination.66,67 Developing socially adept robots which feel empathy, exhibit moral reasoning and act accordingly may also require them to be able to feel pain, raising many ethical questions.68 Legal guidelines and frameworks that address the rights and responsibilities of autonomous robots will also be required.

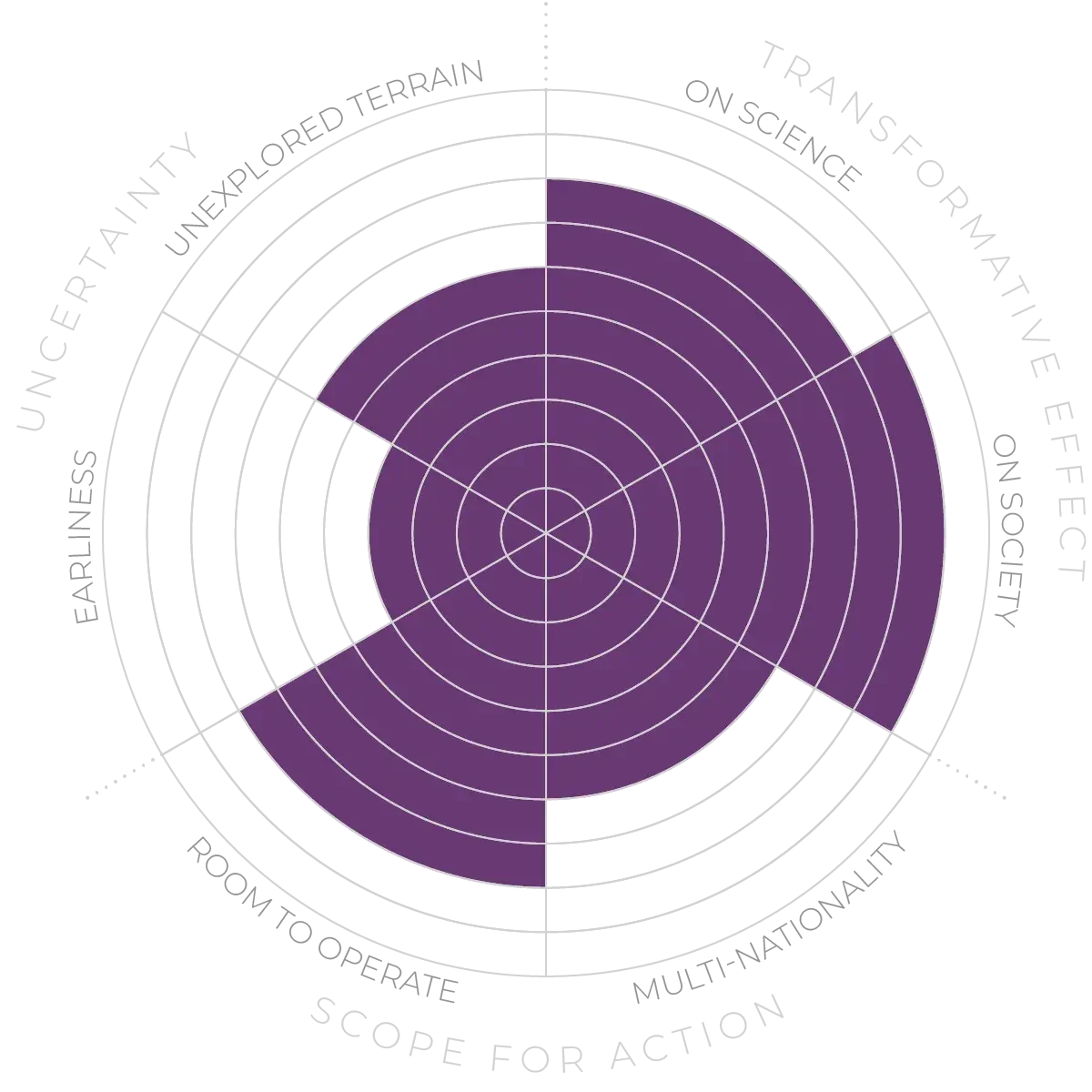

Human-robot interaction - Anticipation Scores

The Anticipation Potential of a research field is determined by the capacity for impactful action in the present, considering possible future transformative breakthroughs in a field over a 25-year outlook. A field with a high Anticipation Potential, therefore, combines the potential range of future transformative possibilities engendered by a research area with a wide field of opportunities for action in the present. We asked researchers in the field to anticipate:

- The uncertainty related to future science breakthroughs in the field

- The transformative effect anticipated breakthroughs may have on research and society

- The scope for action in the present in relation to anticipated breakthroughs.

This chart summarises their responses to each of these elements, which, when combined, provide the Anticipation Potential for the topic. See methodology for more information.