Emerging technologies such as AI have considerable potential to improve our understanding of language and, by extension, of the evolution of human cognitive capacities.³

Future Horizons:

10-year horizon

Machine language becomes ubiquitous

There is widespread evidence of machine-preferred words and constructions in human-generated texts, prompting new approaches to preventing model collapse in future generations of LLMs. Quantised language models, which compress much of the information held in LLMs into smaller models, bring improvements in one-shot learning and generalisation, including across languages.

25-year horizon

New machine-language architectures are developed

New architectures that reduce the risk of model collapse also improve machine-to-machine communication. Linguistic theories are refined and extended to encompass artificial natural languages. Multimodal AI systems become capable of complex, human-like reasoning.

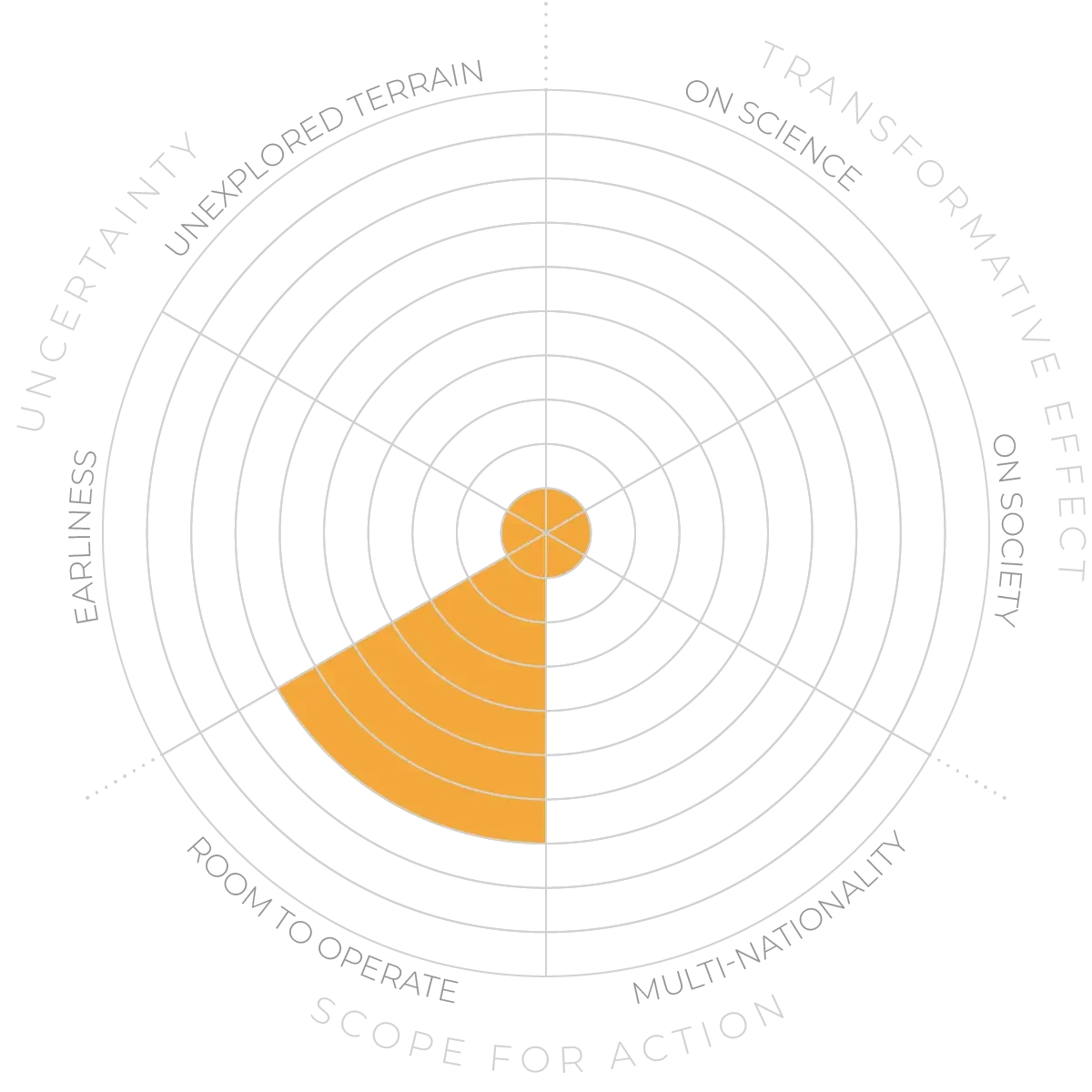

Machines and language - Anticipation Scores

The Anticipation Potential of a research field is determined by the capacity for impactful action in the present, in light of possible transformative breakthroughs in that field over a 25-year outlook. A field with a high Anticipation Potential therefore combines a wide range of possible future transformations arising from the research area with broad opportunities for action in the present. We asked researchers in the field to assess:

- The uncertainty related to future science breakthroughs in the field

- The transformative effect anticipated breakthroughs may have on research and society

- The scope for action in the present in relation to anticipated breakthroughs

This chart summarises their responses to each of these elements, which, when combined, give the Anticipation Potential for the topic. See the methodology for more information.